Types of Caching

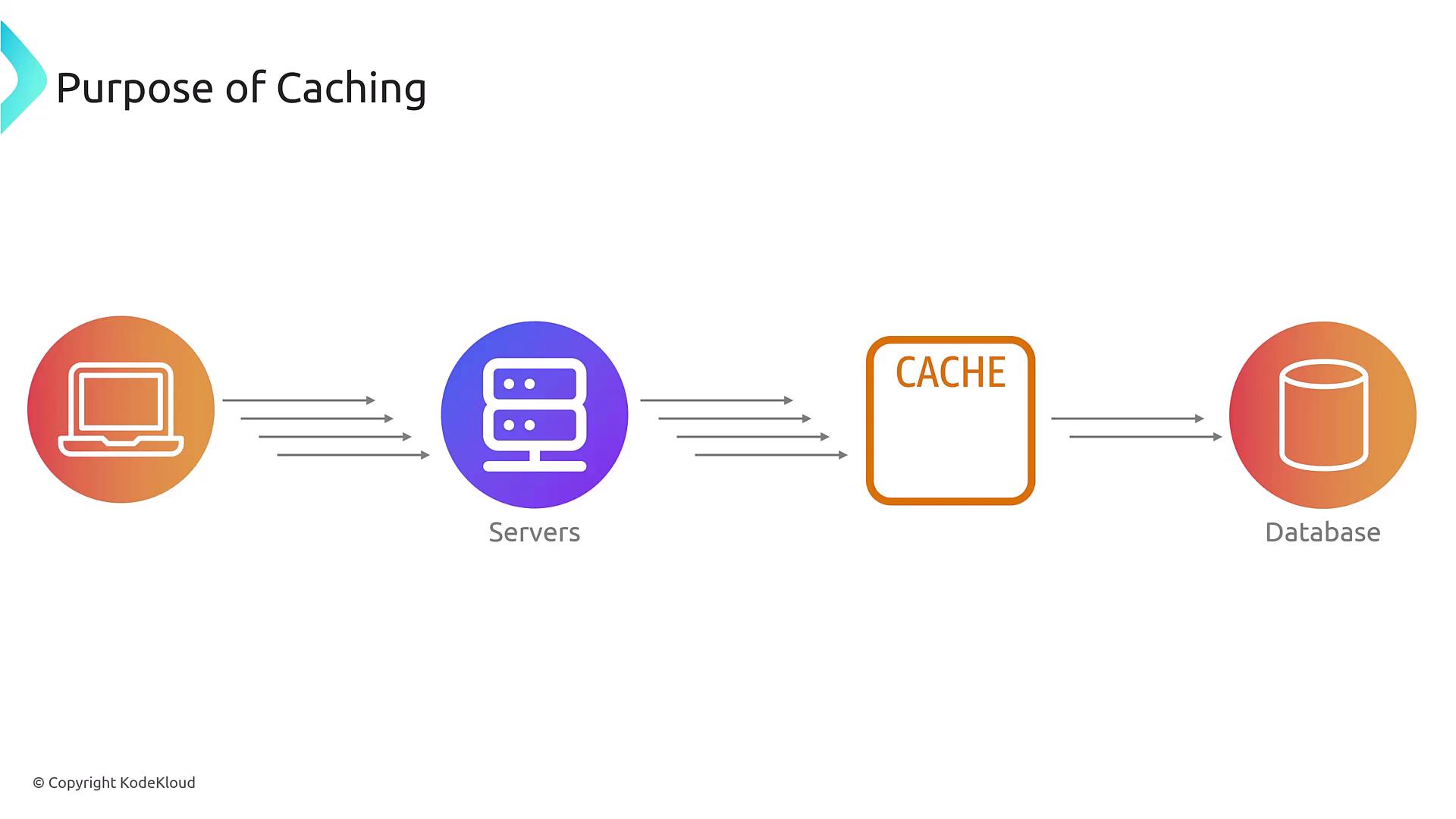

There are several types of caching used in modern applications:

- Database Caching: Stores the results of frequently executed database queries.

- Content Caching: Saves web pages, images, videos, and PDF files.

- Application Caching: Caches application-level data, such as session information or API responses.

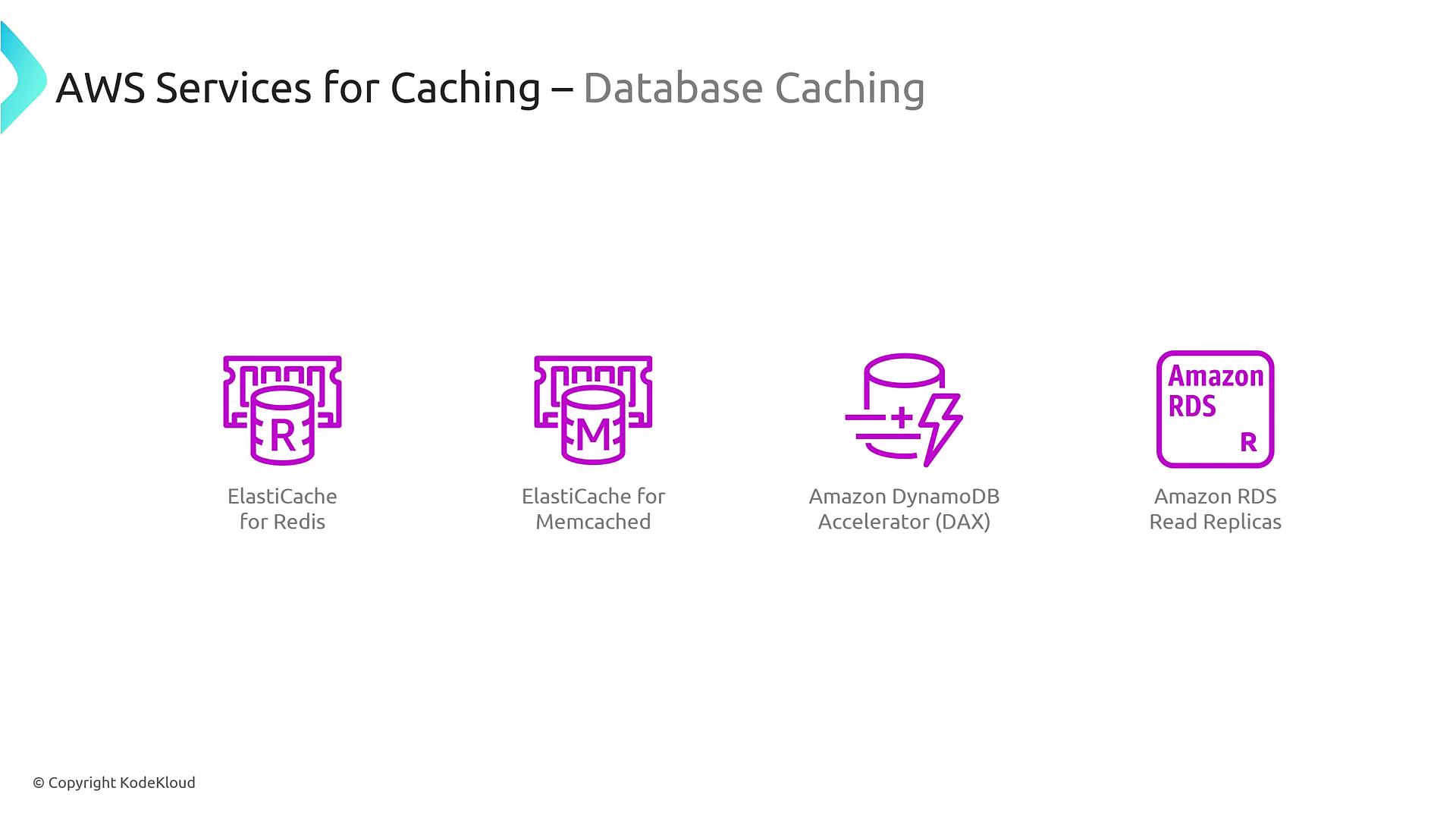

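AWS offers several services that can act as a caching layer:

- ElastiCache: A managed in-memory cache, available with the Redis or Memcached engine.

- Amazon DynamoDB Accelerator (DAX): An in-memory cache for DynamoDB that provides microsecond read response times.

- RDS Read Replicas: Although not in-memory, they provide a form of caching by offloading read traffic from the primary database. An in-memory store such as Redis, however, typically delivers faster reads.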

Caching Strategies

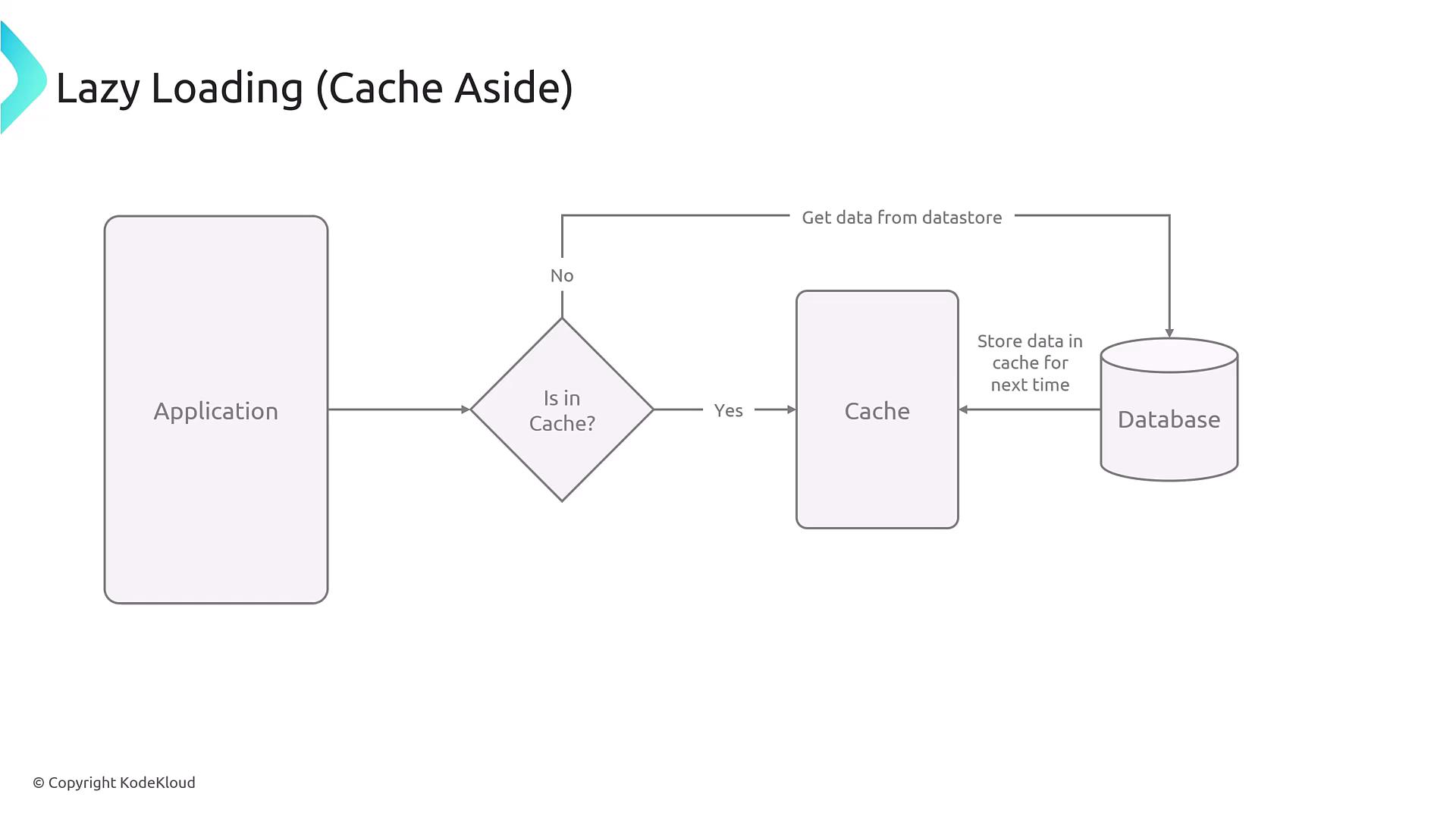

When implementing caching for databases, consider the following commonly used strategies:

1. Lazy Loading (Cache Aside)

Lazy loading, also known as cache aside, involves loading data into the cache only after a cache miss occurs. In practice, the application first queries the cache. If the data is absent, it then fetches from the database, returns the data to the user, and saves a copy in the cache for future requests.

Cache systems like Redis or Memcached do not automatically synchronize with the database; your application must explicitly manage this process.

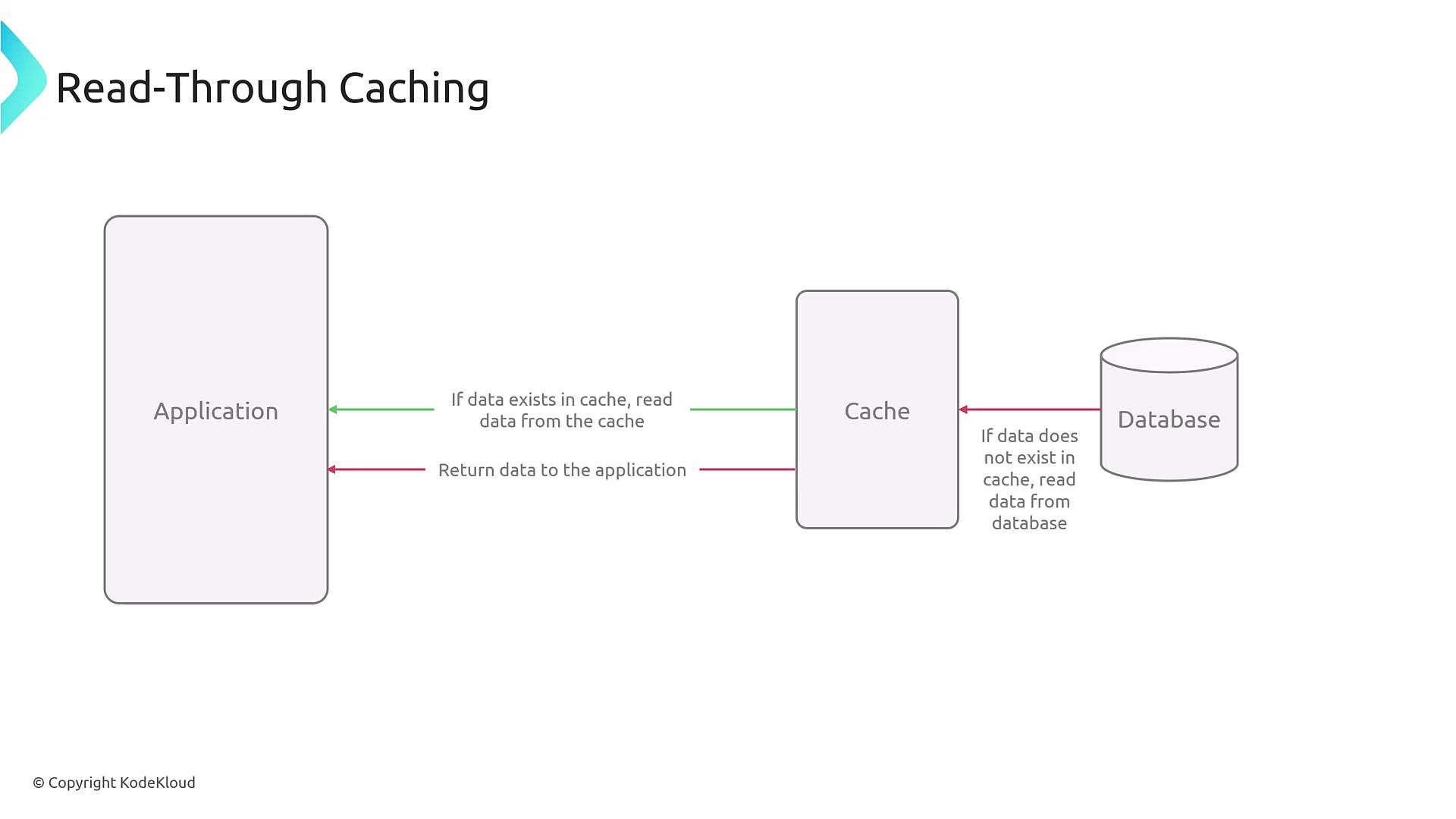

2. Read-Through Caching

In read-through caching, the application requests data only through the cache interface. On a cache miss, the cache itself retrieves the data from the database, stores it, and returns it. This model simplifies application logic by centralizing data access. However, many in-memory stores do not enable it by default, and it usually requires extra configuration or a client-side library.
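For contrast with cache aside, here is a minimal read-through sketch. It assumes a hypothetical `ReadThroughCache` class that receives a loader function when it is created (`load_product` here, also hypothetical). The application only ever calls `get` on the cache, and on a miss the cache invokes the loader itself. As before, a dict stands in for the in-memory store.

```python
from typing import Any, Callable, Dict, Hashable


class ReadThroughCache:
    """The cache owns the loader, so callers never query the database directly."""

    def __init__(self, loader: Callable[[Hashable], Any]):
        self._loader = loader                 # fetches from the backing store
        self._store: Dict[Hashable, Any] = {}

    def get(self, key):
        if key not in self._store:
            # On a miss, the cache itself loads the value and keeps a copy.
            self._store[key] = self._loader(key)
        return self._store[key]


# Hypothetical backing store and loader, for illustration only.
PRODUCTS = {"sku-1": "Keyboard", "sku-2": "Mouse"}
loads = []


def load_product(sku):
    loads.append(sku)      # record each trip to the "database"
    return PRODUCTS[sku]


products = ReadThroughCache(load_product)
print(products.get("sku-1"))  # Keyboard (loaded by the cache)
print(products.get("sku-1"))  # Keyboard (served from memory)
print(loads)                  # ['sku-1']
```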

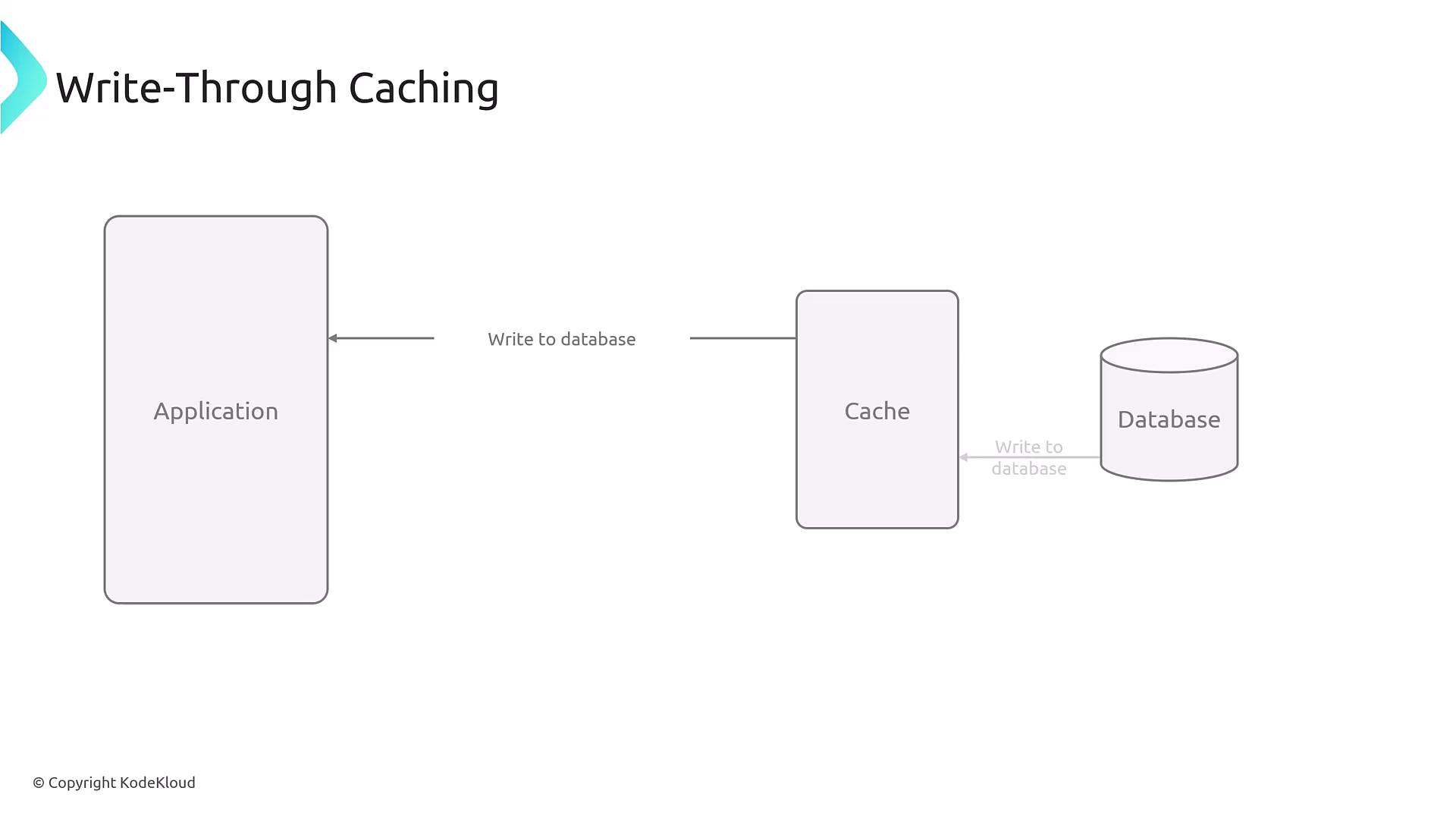

3. Write-Through Caching

Write-through caching updates the cache and the database in the same write operation, so every write from the application reaches both. This keeps the cache consistent with the database and minimizes the risk of serving outdated information.
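Here is a minimal write-through sketch under the same assumptions: dicts stand in for the database and the cache, and `WriteThroughCache` is a hypothetical name. Each `write` updates the database and then the cache in a single call, so a subsequent cache read returns the new value.

```python
class WriteThroughCache:
    """Every write goes to the database and the cache in the same call."""

    def __init__(self, database: dict):
        self.database = database  # stand-in for the system of record
        self.cache = {}           # stand-in for Redis or Memcached

    def write(self, key, value):
        # Persist to the database first; if that raises, the cache is left untouched.
        self.database[key] = value
        self.cache[key] = value

    def read(self, key):
        # Reads are served from the cache, falling back to the database.
        if key in self.cache:
            return self.cache[key]
        value = self.database.get(key)
        if value is not None:
            self.cache[key] = value
        return value


db = {}
store = WriteThroughCache(db)
store.write("user:1", "Alice")
store.write("user:1", "Alicia")            # the update reaches both layers
print(db["user:1"], store.read("user:1"))  # Alicia Alicia
```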

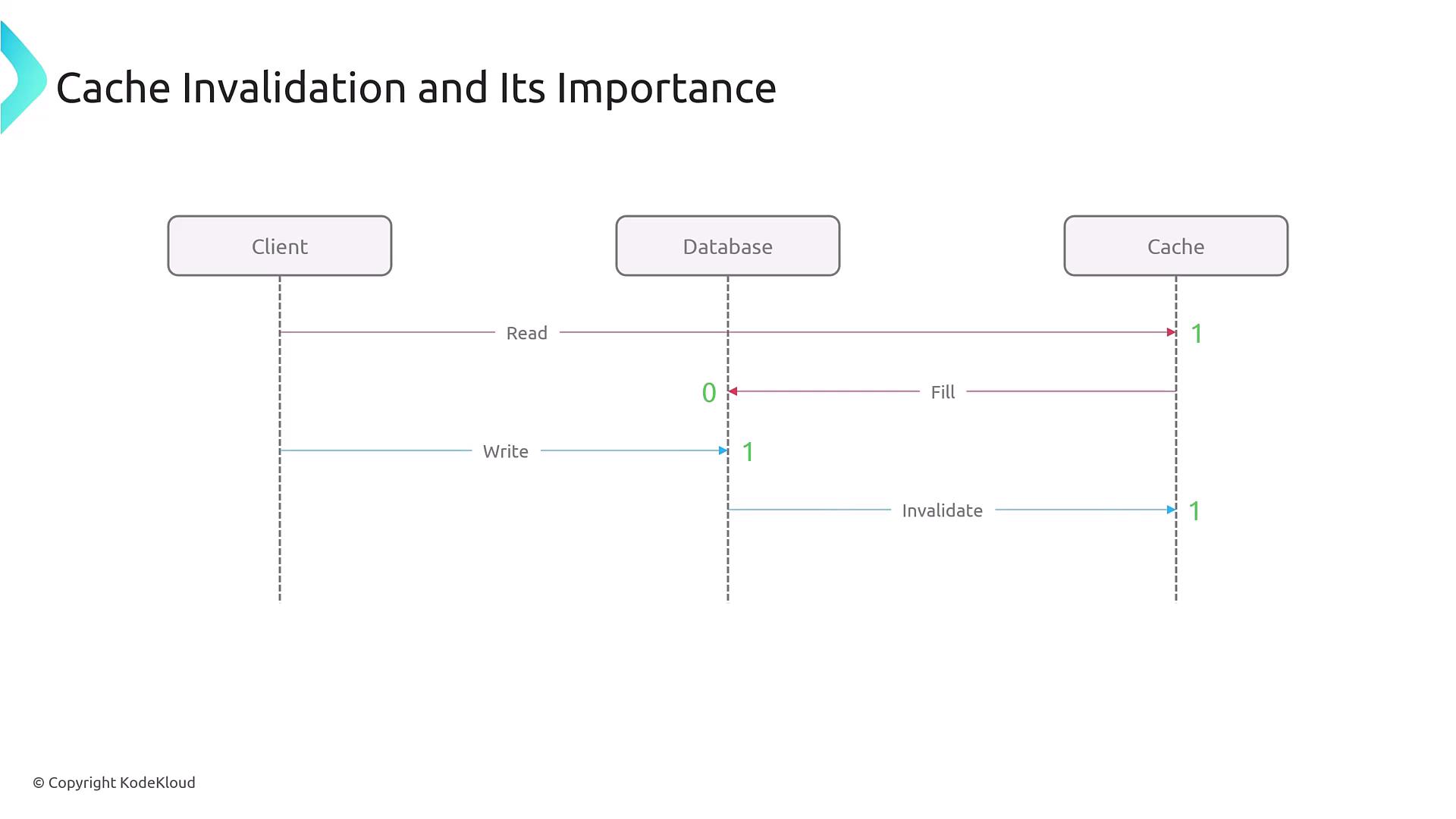

Cache Invalidation

Keeping cached data fresh is critical for application accuracy and performance. Cache invalidation is the process of updating or removing stale cache entries. When data may be outdated, the application can either compare the cached entry with the database or run specific business logic to refresh the cache. Two common approaches are:

- Event-Based Invalidation: Invalidates the affected cache entries as soon as the corresponding database record is updated.

- Time-Based Invalidation: Uses a predetermined Time-To-Live (TTL) for cached data, after which the data is removed and refreshed on subsequent requests.
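Both invalidation approaches can be sketched with a small in-process TTL cache. This is an illustration only; a real deployment would rely on the store's own expiry support, such as the Redis `EXPIRE` command. `TTLCache` and `update_price` are hypothetical names. Each entry stores its own expiry timestamp, and the clock is injectable so that expiry can be demonstrated without waiting. `invalidate` covers the event-based case, and the expiry check in `get` covers the time-based case.

```python
import time


class TTLCache:
    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock        # injectable clock makes expiry easy to test
        self._entries = {}        # key -> (value, expires_at)

    def set(self, key, value):
        self._entries[key] = (value, self.clock() + self.ttl)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            # Time-based invalidation: the entry has outlived its TTL.
            del self._entries[key]
            return None
        return value

    def invalidate(self, key):
        # Event-based invalidation: call this right after the database row changes.
        self._entries.pop(key, None)


def update_price(db, cache, sku, price):
    """Hypothetical write path: update the database, then drop the stale entry."""
    db[sku] = price
    cache.invalidate(sku)


now = [0.0]                                   # fake clock for the demo
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
db = {"sku-1": 10}

cache.set("sku-1", db["sku-1"])
update_price(db, cache, "sku-1", 12)
print(cache.get("sku-1"))   # None: removed by the update event

cache.set("sku-1", db["sku-1"])               # repopulated at t = 0
now[0] = 30.0
print(cache.get("sku-1"))   # 12: still within the TTL
now[0] = 61.0
print(cache.get("sku-1"))   # None: expired after 60 seconds
```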

Cache Eviction

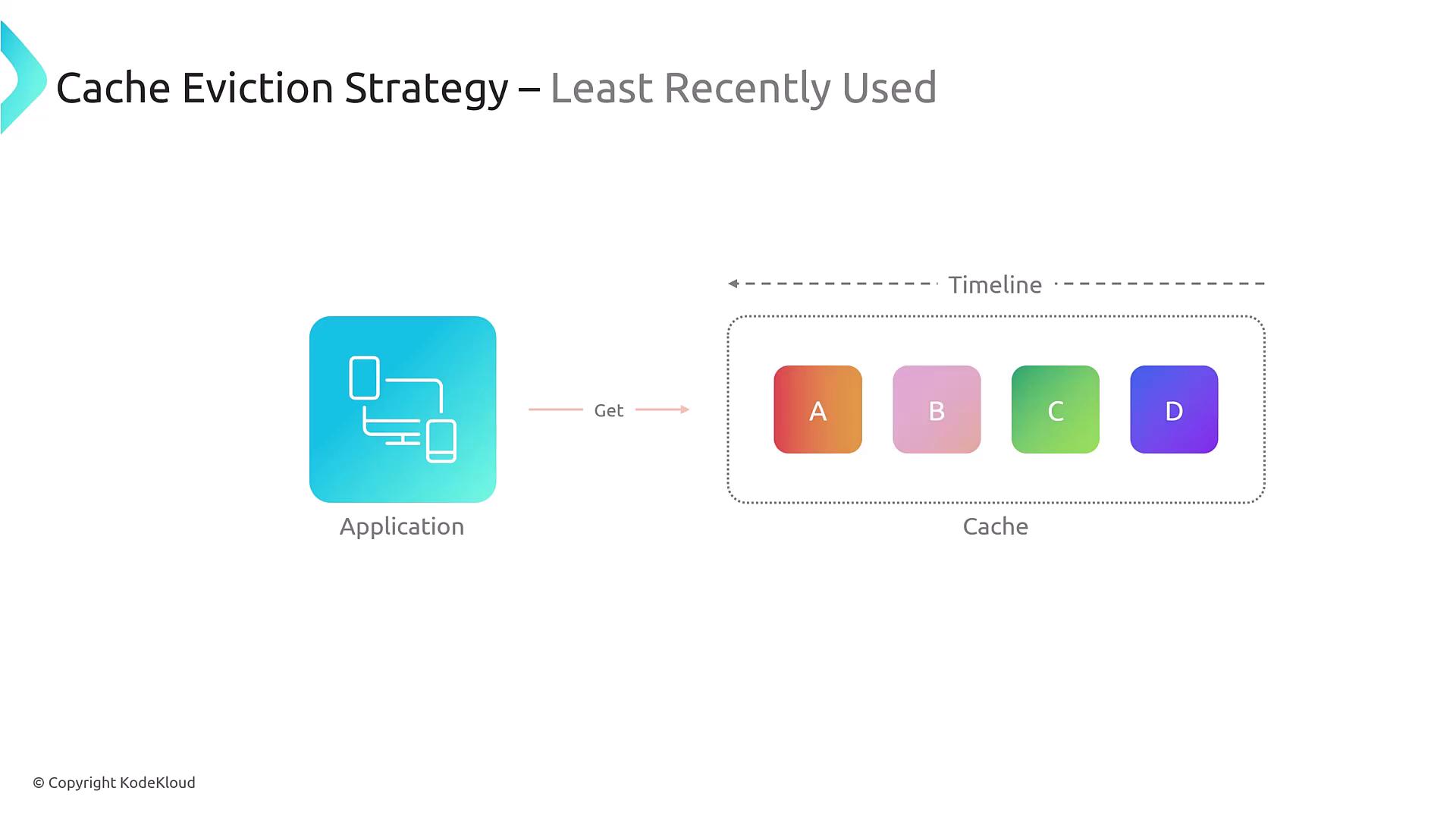

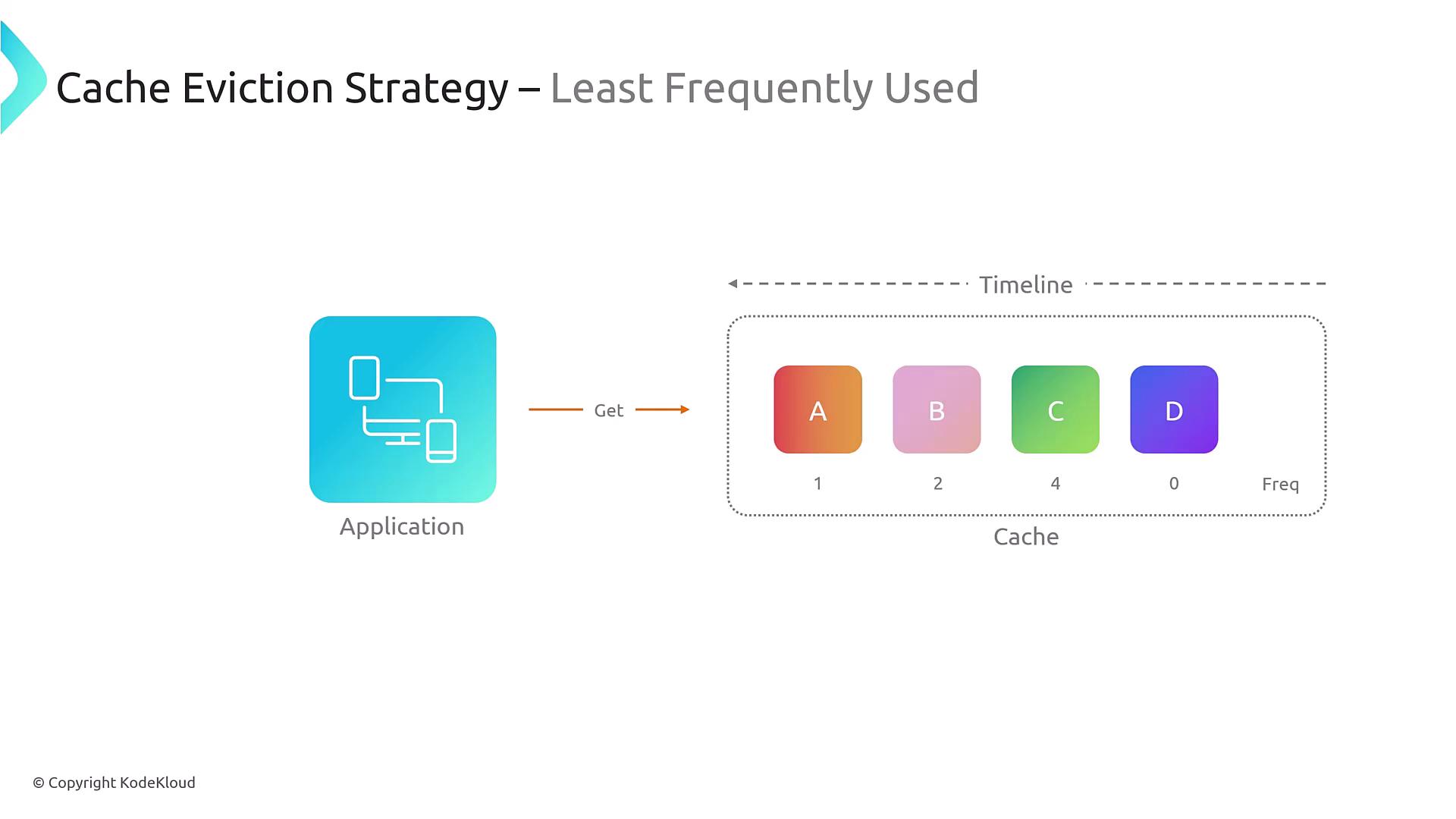

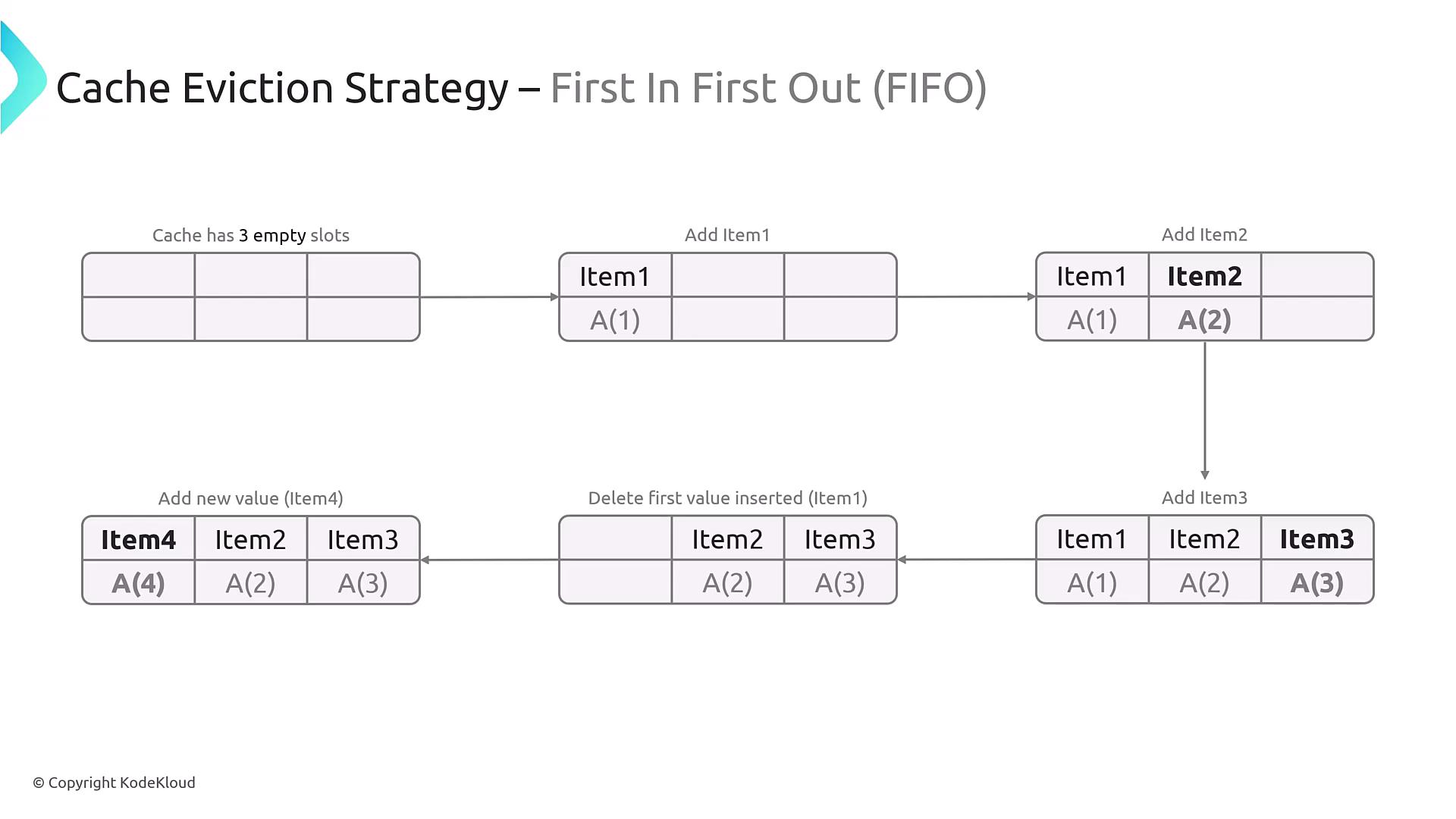

Cache eviction is the process of deciding which data to remove when the cache reaches its capacity. For example, once a cache with 4 GB of memory is full, the eviction policy decides which cached items to discard to make room for new data. Popular eviction strategies include:

- Least Recently Used (LRU): Evicts items that have not been accessed for the longest time.

- Least Frequently Used (LFU): Removes items that have the fewest accesses.

- First In, First Out (FIFO): Discards the items that were added to the cache earliest, regardless of how recently or how often they were accessed.
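The three policies differ only in which entry they drop when the cache is full. The sketch below implements each one with hypothetical class names. It assumes capacity is measured in number of entries, whereas real caches such as Redis limit memory in bytes. The demo runs the same access sequence through all three so that the difference is visible.

```python
from collections import OrderedDict


class LRUCache:
    """Evicts the entry that has gone longest without being read or written."""

    def __init__(self, capacity: int):
        self.capacity = capacity      # measured in entries, not bytes
        self._data = OrderedDict()    # ordered from least to most recently used

    def __contains__(self, key):
        return key in self._data

    def get(self, key):
        if key not in self._data:
            return None
        self._data.move_to_end(key)   # a read makes the key most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # drop the least recently used entry


class FIFOCache:
    """Evicts the entry inserted earliest; reads do not affect the order."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._data = OrderedDict()    # ordered by first insertion

    def __contains__(self, key):
        return key in self._data

    def get(self, key):
        return self._data.get(key)

    def put(self, key, value):
        self._data[key] = value       # updating a key keeps its original position
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # drop the oldest insertion


class LFUCache:
    """Evicts the entry with the fewest accesses; ties go to the earliest inserted."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._data = {}
        self._hits = {}               # key -> number of get/put accesses

    def __contains__(self, key):
        return key in self._data

    def get(self, key):
        if key not in self._data:
            return None
        self._hits[key] += 1
        return self._data[key]

    def put(self, key, value):
        if key not in self._data and len(self._data) >= self.capacity:
            # min() scans in insertion order, so the first key with the lowest count wins ties.
            victim = min(self._hits, key=self._hits.get)
            del self._data[victim]
            del self._hits[victim]
        self._data[key] = value
        self._hits[key] = self._hits.get(key, 0) + 1


for cls in (LRUCache, FIFOCache, LFUCache):
    cache = cls(capacity=2)
    cache.put("a", 1)
    cache.put("b", 2)
    cache.get("a")          # "a" becomes more recent and more frequent than "b"
    cache.put("c", 3)       # cache is full, so one entry must be evicted
    print(cls.__name__, [k for k in "abc" if k in cache])
# LRUCache ['a', 'c']
# FIFOCache ['b', 'c']
# LFUCache ['a', 'c']
```

LRU and LFU both keep `"a"` in this run because it was read after insertion. FIFO evicts it simply because it arrived first.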

When designing a caching strategy for your application, consider your data access patterns. Redis, including Redis on ElastiCache, lets you choose an eviction policy through the `maxmemory-policy` setting (for example, `allkeys-lru` or `allkeys-lfu`), so eviction behavior can be matched to your workload and performance needs.