Background and Evolution

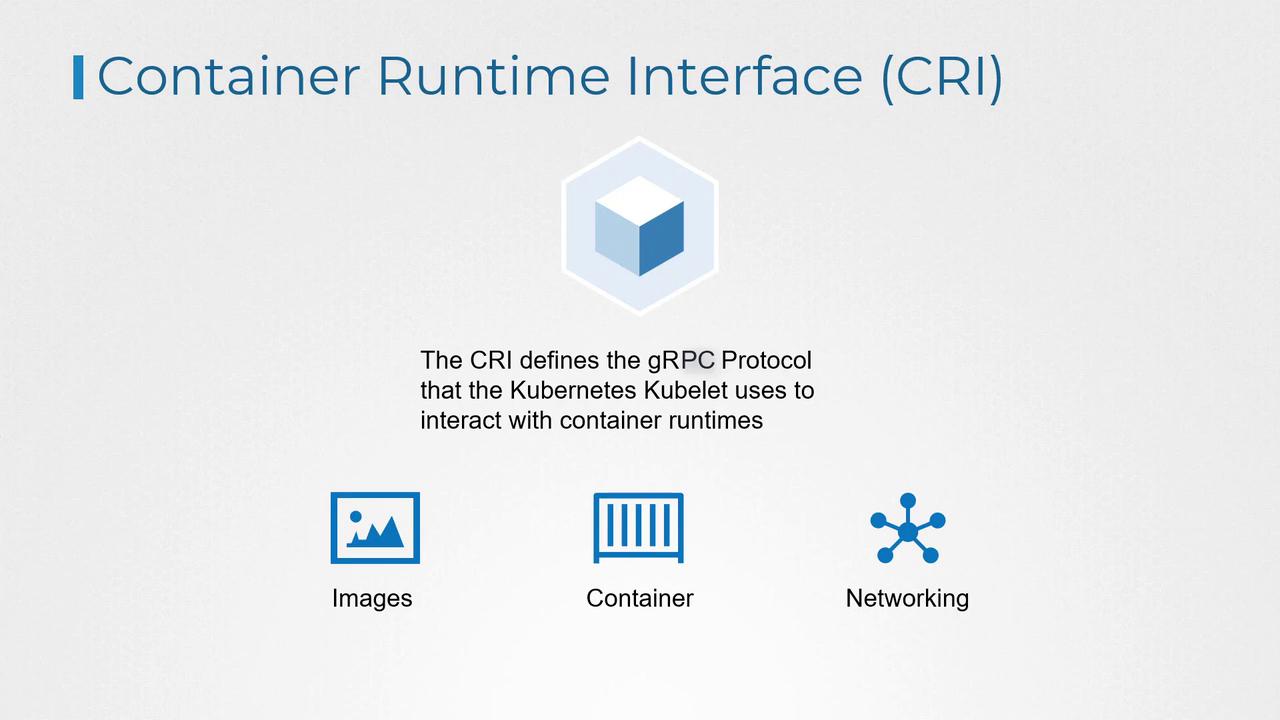

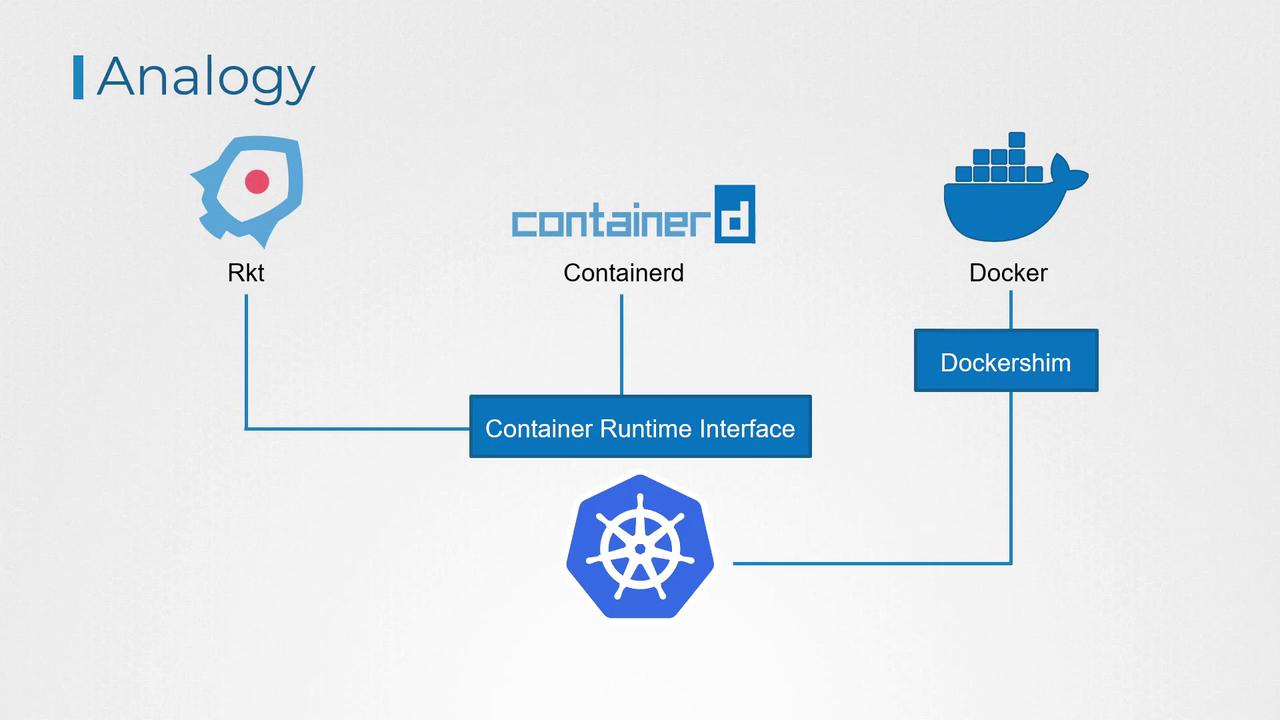

Initially, Docker became the most popular solution for container operations due to its simplicity. When Kubernetes was first introduced, it was designed to orchestrate Docker containers exclusively. However, as Kubernetes grew in popularity, other container runtimes such as rkt (originally named Rocket) and containerd emerged and needed a way to integrate with Kubernetes. To address this need, Kubernetes introduced the Container Runtime Interface (CRI). CRI defines a plugin interface that any container runtime vendor can implement, with the runtimes themselves following the Open Container Initiative (OCI) standards. The interface is a gRPC API that the Kubernetes kubelet uses to manage container images, containers, and pod sandboxes (including pod-level networking setup). Because the kubelet depends only on this API, a runtime can be developed and released independently of Kubernetes, and system architects have the flexibility to choose the runtime that best fits their environment.
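To make the plugin idea concrete, here is a minimal in-process sketch in Python. It is not the real CRI, which is a gRPC API defined in protobuf; the names `ContainerRuntime`, `InMemoryRuntime`, and `start_pod` are hypothetical, and the method names only loosely echo CRI calls such as `RunPodSandbox` and `CreateContainer`. It illustrates one point: code written against the interface runs unchanged on any runtime that implements it.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class ContainerRuntime(ABC):
    """Hypothetical, heavily simplified stand-in for the CRI surface."""

    @abstractmethod
    def pull_image(self, image: str) -> str:
        """Fetch an image and return a runtime-specific reference to it."""

    @abstractmethod
    def run_pod_sandbox(self, pod_name: str) -> str:
        """Create the pod-level environment and return its ID."""

    @abstractmethod
    def create_container(self, sandbox_id: str, name: str, image_ref: str) -> str:
        """Create a container inside a sandbox and return its ID."""

    @abstractmethod
    def start_container(self, container_id: str) -> None:
        """Start a previously created container."""


class InMemoryRuntime(ContainerRuntime):
    """Toy runtime that only records state; it starts no real containers."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.running = []  # IDs of containers that were "started"
        self._next_id = 0

    def _new_id(self, kind: str) -> str:
        self._next_id += 1
        return f"{self.name}-{kind}-{self._next_id}"

    def pull_image(self, image: str) -> str:
        return f"{self.name}://{image}"

    def run_pod_sandbox(self, pod_name: str) -> str:
        return self._new_id("sandbox")

    def create_container(self, sandbox_id: str, name: str, image_ref: str) -> str:
        return self._new_id("ctr")

    def start_container(self, container_id: str) -> None:
        self.running.append(container_id)


def start_pod(runtime: ContainerRuntime, pod_name: str, containers: dict[str, str]) -> list[str]:
    """Kubelet-like logic that knows only the interface, not the runtime."""
    sandbox_id = runtime.run_pod_sandbox(pod_name)
    started = []
    for name, image in containers.items():
        image_ref = runtime.pull_image(image)
        container_id = runtime.create_container(sandbox_id, name, image_ref)
        runtime.start_container(container_id)
        started.append(container_id)
    return started


# The same call works against two different (toy) runtimes.
for runtime in (InMemoryRuntime("runtime-a"), InMemoryRuntime("runtime-b")):
    start_pod(runtime, "web", {"app": "nginx:1.25"})
```

Swapping the runtime means passing a different object to `start_pod`; nothing in the orchestration logic changes. In the real system the boundary is a gRPC connection rather than a Python method call.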

For more details on container orchestration and Kubernetes, see the Kubernetes documentation.

Docker and the Introduction of dockershim

Docker’s widespread adoption in the container ecosystem meant that even after CRI was introduced, the Kubernetes community continued to support Docker. Because Docker does not implement the CRI itself, Kubernetes maintained a temporary adapter known as dockershim. The kubelet talked to this intermediary layer, which translated its requests into calls to the Docker Engine, ensuring that existing Docker-based workflows continued to function without changes.
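The shim is essentially an adapter, which can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the actual dockershim code: `FakeDockerEngine` is a hypothetical stand-in for the Docker Engine (it is not the Docker SDK), `DockerShimSketch` is a made-up name, and the `pause` image and `k8s_POD_` name prefix are placeholders for how a pod sandbox might be represented as an ordinary container.

```python
class FakeDockerEngine:
    """Hypothetical stand-in for the Docker Engine; records calls only."""

    def __init__(self):
        self.calls = []
        self._count = 0

    def image_pull(self, name):
        self.calls.append(("image_pull", name))

    def container_create(self, image, name):
        self._count += 1
        self.calls.append(("container_create", image, name))
        return f"docker-{self._count}"

    def container_start(self, container_id):
        self.calls.append(("container_start", container_id))


class DockerShimSketch:
    """Exposes CRI-style methods and translates each into Docker-style calls."""

    def __init__(self, engine):
        self._engine = engine

    def pull_image(self, image):
        self._engine.image_pull(image)
        return image

    def run_pod_sandbox(self, pod_name):
        # Assumption: the sandbox is modeled as a placeholder container.
        sandbox_id = self._engine.container_create("pause", f"k8s_POD_{pod_name}")
        self._engine.container_start(sandbox_id)
        return sandbox_id

    def create_container(self, sandbox_id, name, image_ref):
        # A real shim would attach the container to the sandbox's namespaces;
        # this sketch only forwards the create call.
        return self._engine.container_create(image_ref, name)

    def start_container(self, container_id):
        self._engine.container_start(container_id)


# The caller speaks only the CRI-style methods; the engine sees Docker-style calls.
engine = FakeDockerEngine()
shim = DockerShimSketch(engine)
sandbox = shim.run_pod_sandbox("web")
container = shim.create_container(sandbox, "app", shim.pull_image("nginx:1.25"))
shim.start_container(container)
```

The design choice this shows is that the translation lives in one extra layer, so neither the caller nor the engine has to change. The cost is that the extra layer must be maintained alongside both.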

Embracing Container-Native CRI Support

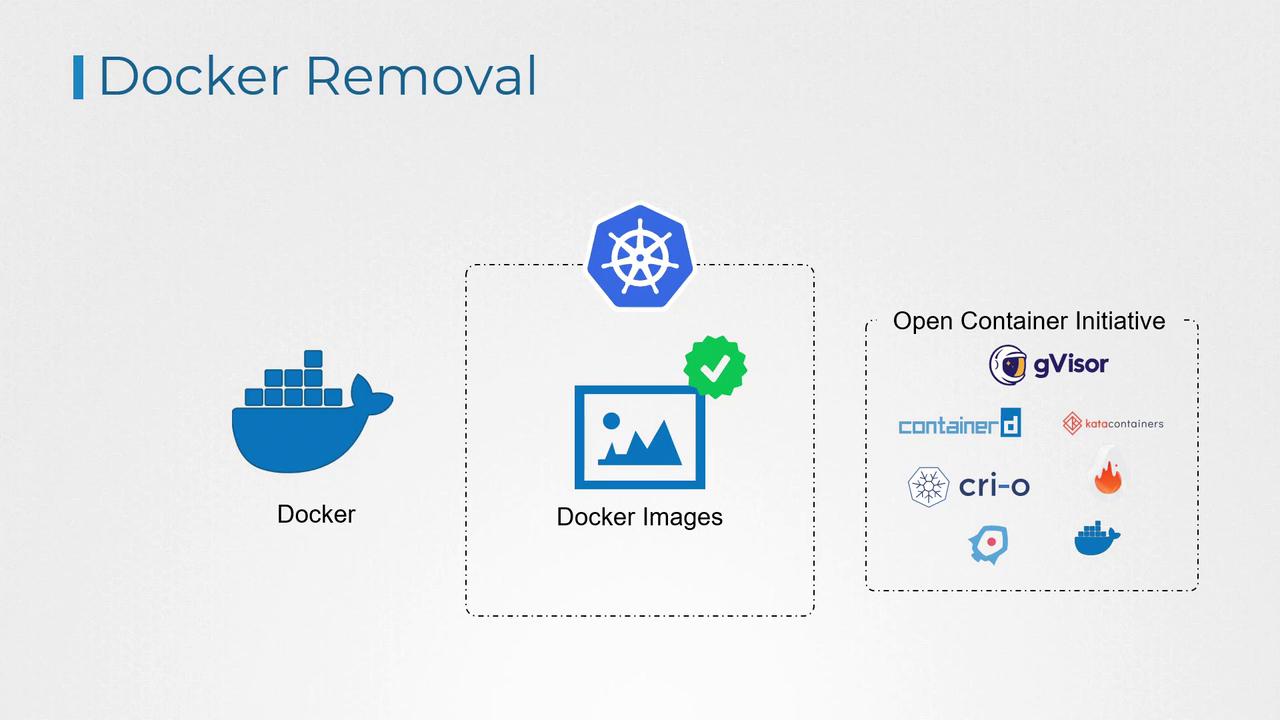

With dockershim removed from Kubernetes as of version 1.24, users are encouraged to adopt container runtimes that natively support the CRI. This shift enhances compatibility and standardization across container runtimes and helps mitigate vendor lock-in. By supporting multiple container runtimes through one interface, Kubernetes enables organizations to choose the most appropriate solution for their infrastructure needs.

For further reading on container runtimes and Kubernetes best practices, see Kubernetes Basics and other related resources.