|) operator.

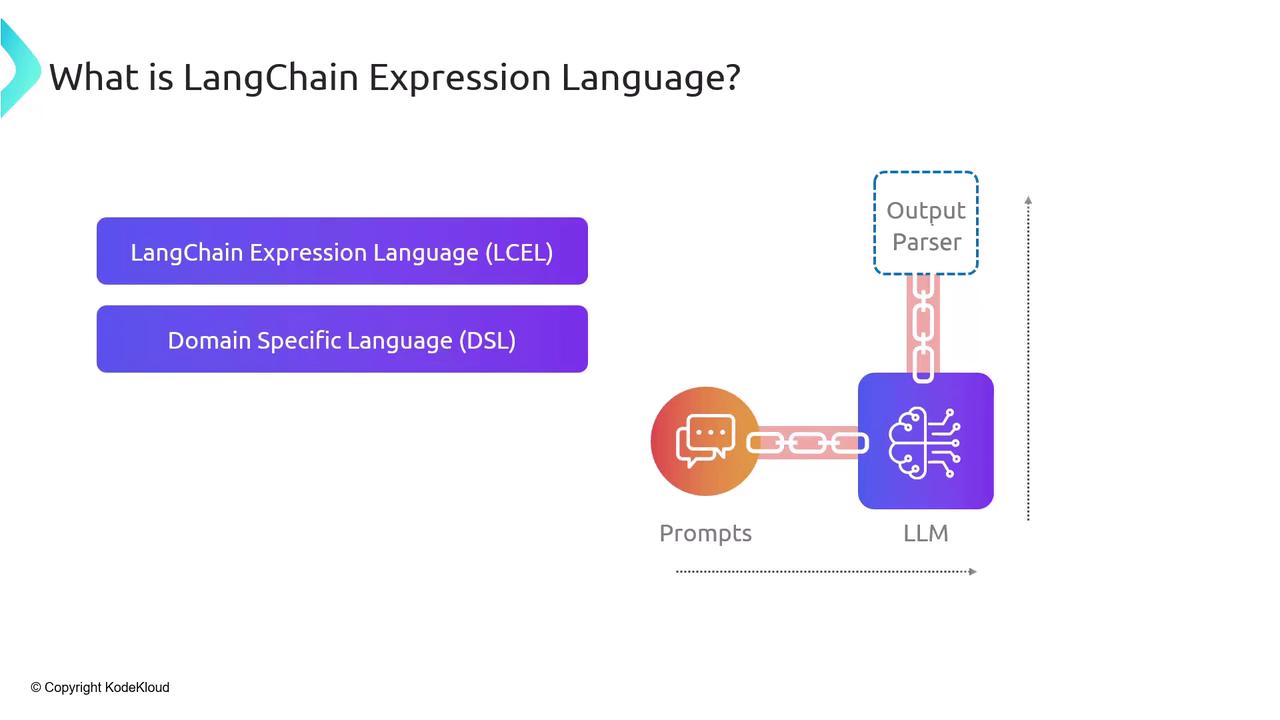

LCEL treats each LangChain component (prompts, LLMs, retrievers, parsers, etc.) as a stage in a logical pipeline. The output of one stage seamlessly becomes the input of the next, resulting in clean, modular code that’s straightforward to extend and debug.

By chaining components with `|`, LCEL builds an executable graph under the hood:

- Modularity: Swap, reorder, or reuse stages without rewriting the entire chain

- Readability: A visual, left-to-right flow similar to shell pipelines

- Extensibility: Integrate custom `Runnable` components, retrievers, or parsers
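To make the "executable graph" idea concrete, here is a minimal, dependency-free sketch of how a `|` operator can assemble stages into an ordered pipeline. `Step` and `Pipeline` are hypothetical stand-ins written for this illustration, not LangChain classes, and LCEL's real implementation is considerably more elaborate.

```python
from __future__ import annotations

from typing import Any, Callable


class Step:
    """Toy stand-in for a pipeline stage (NOT LangChain's real class)."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: Step) -> Pipeline:
        # `a | b` runs nothing yet; it only records the order of stages.
        return Pipeline([self, other])


class Pipeline(Step):
    """An ordered list of steps that can itself be used as a step."""

    def __init__(self, steps: list[Step]) -> None:
        self.steps = steps

    def invoke(self, value: Any) -> Any:
        # Feed the output of each stage into the next one.
        for step in self.steps:
            value = step.invoke(value)
        return value

    def __or__(self, other: Step) -> Pipeline:
        return Pipeline([*self.steps, other])


strip = Step(str.strip)
upper = Step(str.upper)
exclaim = Step(lambda s: s + "!")

chain = strip | upper | exclaim
print(len(chain.steps))          # 3
print(chain.invoke("  hello "))  # HELLO!
```

Because composition only builds a data structure, stages can be swapped or reordered (for example `upper | strip`) without touching their internals, which is the modularity benefit listed above.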

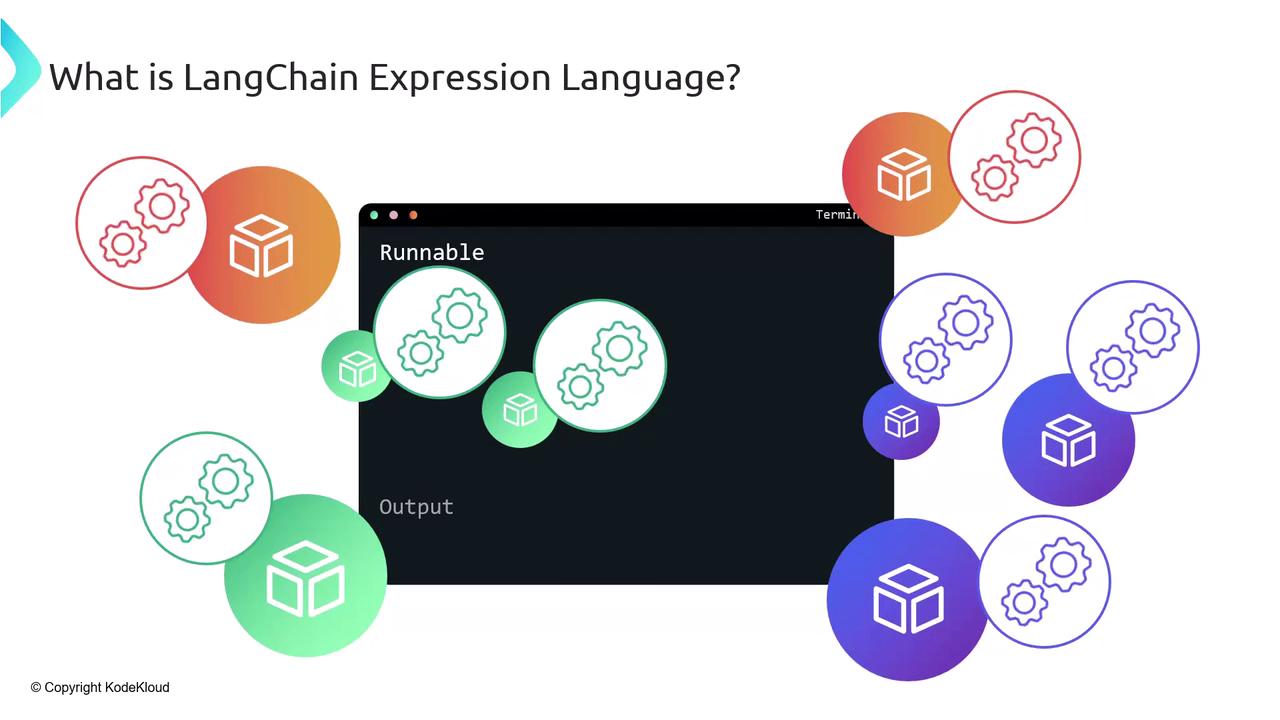

Every LCEL component builds on the `Runnable` base class. By subclassing `Runnable[InputType, OutputType]`, you define how a component:

- Receives input

- Processes its logic (e.g., calls an LLM or parses text)

- Emits output

`Runnable` ensures your custom components plug into LCEL pipelines without extra boilerplate.
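The three responsibilities above (receive, process, emit) can be sketched with a simplified, self-contained stand-in for the interface. The `Runnable` class defined here is an assumption made for illustration so the snippet runs without dependencies; in a real project you would import `Runnable` from `langchain_core.runnables`, whose `invoke` method also accepts an optional `config` argument. `WordCounter` is a hypothetical component.

```python
from abc import ABC, abstractmethod
from typing import Generic, TypeVar

Input = TypeVar("Input")
Output = TypeVar("Output")


class Runnable(ABC, Generic[Input, Output]):
    """Simplified stand-in for the Runnable interface (illustrative only)."""

    @abstractmethod
    def invoke(self, input: Input) -> Output:
        """Turn one input value into one output value."""


class WordCounter(Runnable[str, int]):
    """Hypothetical custom component: takes text, returns its word count."""

    def invoke(self, input: str) -> int:
        words = input.split()  # process: split on whitespace
        return len(words)      # emit: an int, as declared in Runnable[str, int]


counter = WordCounter()
print(counter.invoke("LCEL chains are composable"))  # 4
```

Declaring the input and output types in the base class parameters documents what the component expects and returns, which helps when it is placed between other stages.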

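Define your component by extending `Runnable` and overriding the `invoke` method.

In a Unix shell, `|` passes the output of one command to another, as in `cat file.txt | grep "error" | wc -l`:

- `cat file.txt` streams the file content
- `grep "error"` filters for “error” lines
- `wc -l` counts matching lines

An LCEL chain follows the same pattern: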

- Prompt formats the question

- LLM generates the answer

- Parser extracts the relevant output
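The prompt, LLM, and parser stages can be illustrated with plain Python functions. This is a sketch under stated assumptions: `fake_llm` is a canned stub standing in for a real model call, and `chain`, `format_prompt`, and `parse_answer` are hypothetical helpers, not LangChain APIs.

```python
import re
from functools import reduce
from typing import Any, Callable

# Canned replies so the example runs without a model or network access.
CANNED_REPLIES = {"Q: What is the capital of France?": "Paris"}


def format_prompt(question: str) -> str:
    """Prompt stage: format the question."""
    return f"Q: {question}"


def fake_llm(prompt_text: str) -> str:
    """LLM stage (stub): a real model call would go here."""
    return "Answer: " + CANNED_REPLIES.get(prompt_text, "unknown")


def parse_answer(raw: str) -> str:
    """Parser stage: extract the text after 'Answer: '."""
    match = re.search(r"Answer: (.*)", raw)
    if match is None:
        raise ValueError(f"no answer found in: {raw!r}")
    return match.group(1)


def chain(*stages: Callable[[Any], Any]) -> Callable[[Any], Any]:
    """Compose stages left to right, like a shell pipeline."""
    return lambda value: reduce(lambda acc, stage: stage(acc), stages, value)


qa = chain(format_prompt, fake_llm, parse_answer)
print(qa("What is the capital of France?"))  # Paris
```

Each stage can be called and tested on its own, which makes it easier to locate a fault than in a single function that does all three jobs.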

Long, monolithic pipelines can be hard to debug. Break complex workflows into smaller sub-chains and reassemble them for clarity.

| Component | Role | Example |
|---|---|---|
| Prompt | Template for input formatting | `PromptTemplate("Q: {text}")` |
| LLM | Language model invocation | `OpenAI(model="gpt-4")` |
| Retriever | External data lookup (e.g., docs) | `PDFRetriever(file_path="report.pdf")` |
| OutputParser | Structured output extraction | `RegexParser(pattern=r"Answer: (.*)")` |
| CustomRunnable | User-defined processing logic | `MyCustomProcessor()` |

- Read the official LCEL documentation

- Explore the LangChain GitHub examples

- Try extending `Runnable` with your own custom processors