- Summarization: Batch summarization using the Create Stuff Documents Chain

- Retrieval: Retrieval-Augmented Generation (RAG) via the Create Retrieval Chain

1. Summarization with Create Stuff Documents Chain

The Create Stuff Documents Chain merges a list of document chunks into a single prompt and sends it to your LLM. This is ideal when your combined content stays within the model’s context window. Use cases:

- Summarize multiple documents in one pass

- Extract specific insights across all inputs

Example: Batch Summarization
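The stuffing step can be sketched in plain Python. This is an illustration of the idea, not LangChain's own implementation (in LangChain the role is played by `create_stuff_documents_chain`). The names `stuff_documents`, `summarize_batch`, and `fake_llm` are hypothetical, and the estimate of roughly four characters per token is only a coarse assumption for English text.

```python
from typing import Callable, List

# Hypothetical prompt; a real chain would use its own template.
PROMPT_TEMPLATE = "Summarize the following documents:\n\n{context}"


def estimate_tokens(text: str) -> int:
    """Rough token estimate (assumption: about 4 characters per token)."""
    return (len(text) + 3) // 4


def stuff_documents(chunks: List[str], separator: str = "\n\n") -> str:
    """Merge every chunk into a single prompt string."""
    return PROMPT_TEMPLATE.format(context=separator.join(chunks))


def summarize_batch(
    chunks: List[str],
    llm: Callable[[str], str],
    max_tokens: int = 4000,
) -> str:
    """Stuff all chunks into one prompt and send it to the model once."""
    prompt = stuff_documents(chunks)
    if estimate_tokens(prompt) > max_tokens:
        raise ValueError("Combined documents exceed the context budget")
    return llm(prompt)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call, so the sketch runs offline."""
    return f"summary of a {len(prompt)}-character prompt"


chunks = [
    "LangChain provides prebuilt chains for common tasks.",
    "A stuff chain places every document into one prompt.",
]
print(summarize_batch(chunks, fake_llm))
```

The budget check runs before the model call, so an oversized batch fails early instead of producing a truncated or rejected request.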

Ensure the total token count of your document chunks doesn’t exceed your model’s context limit. You can use the tiktoken library to estimate tokens in advance.

2. Retrieval with Create Retrieval Chain

For larger corpora that exceed a single prompt window, the Create Retrieval Chain combines a retriever with the Stuff Documents logic. This effectively implements a simple RAG workflow:

- Retriever retrieves the most relevant chunks.

- Document Chain formats those chunks into a prompt.

- LLM generates the final answer.

Example: Simple RAG Pipeline
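The three steps above (retrieve, format, generate) can be sketched without any external library. This is a toy illustration rather than LangChain's `create_retrieval_chain`: the word-overlap retriever stands in for a real vector store, and `retrieve`, `format_prompt`, `answer`, and `echo_llm` are hypothetical names.

```python
import re
from typing import Callable, List


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Step 1: rank chunks by shared words with the query (toy retriever)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop chunks that share no words with the query at all.
    return [chunk for score, chunk in scored[:k] if score > 0]


def format_prompt(question: str, context_chunks: List[str]) -> str:
    """Step 2: stuff the retrieved chunks into a single prompt."""
    context = "\n\n".join(context_chunks)
    return f"Answer using only this context:\n\n{context}\n\nQuestion: {question}"


def answer(
    question: str,
    chunks: List[str],
    llm: Callable[[str], str],
    k: int = 2,
) -> str:
    """Step 3: send the formatted prompt to the model."""
    return llm(format_prompt(question, retrieve(question, chunks, k)))


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model: returns the prompt it received."""
    return prompt


corpus = [
    "Paris is the capital of France.",
    "The Nile is a river in Africa.",
    "France borders Spain and Italy.",
]
print(answer("What is the capital of France?", corpus, echo_llm))
```

Only the retrieved chunks reach the prompt, which is what lets this pattern work on corpora far larger than the context window.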

Always index your documents using the same embedding model you use at query time to ensure consistency in vector representations.
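One way to make that consistency hard to break is to let the index own the embedding function, so documents and queries always pass through the same code path. The sketch below is a toy under stated assumptions: `letter_embedder` is a hypothetical stand-in for a real embedding model, and `VectorIndex` is not a LangChain class.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def letter_embedder(text: str) -> Vector:
    """Toy embedding: counts of the letters a-z (stand-in for a real model)."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1.0
    return counts


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; refuses vectors of different dimensionality."""
    if len(a) != len(b):
        raise ValueError("Vectors come from different embedding models")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class VectorIndex:
    """Stores one embedding function and uses it for indexing and querying."""

    def __init__(self, embed: Callable[[str], Vector]) -> None:
        self.embed = embed
        self.entries: List[Tuple[str, Vector]] = []

    def add(self, text: str) -> None:
        self.entries.append((text, self.embed(text)))

    def search(self, query: str, k: int = 1) -> List[str]:
        query_vector = self.embed(query)  # same embedder as in add()
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine(query_vector, entry[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


index = VectorIndex(letter_embedder)
index.add("zebra zoo")
index.add("apple pie")
print(index.search("zoo"))
```

A dimension check like the one in `cosine` only catches the obvious mismatches. Two different models with the same vector size would pass it and still return meaningless rankings, which is why binding the embedder to the index is the safer habit.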

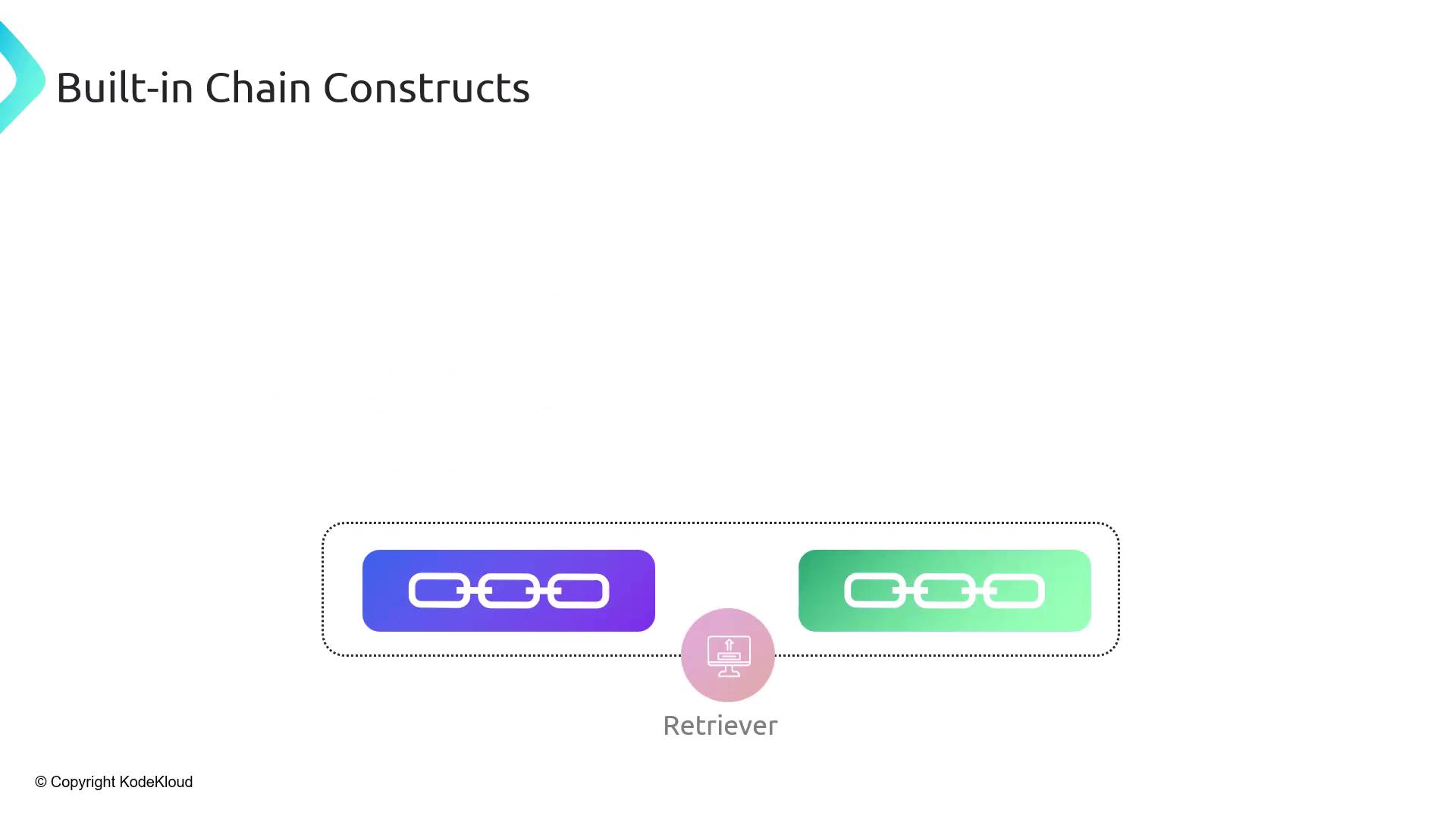

Comparison of Built-In Chains

| Chain Type | Use Case | Components | Key Benefit |
|---|---|---|---|
| Create Stuff Documents Chain | Batch summarization, multi-doc info extraction | LLM | Simple prompt stitching |
| Create Retrieval Chain | RAG over large corpora | Retriever + LLM + Document Chain | Scales beyond context window |