Revisiting Event-Driven Architecture

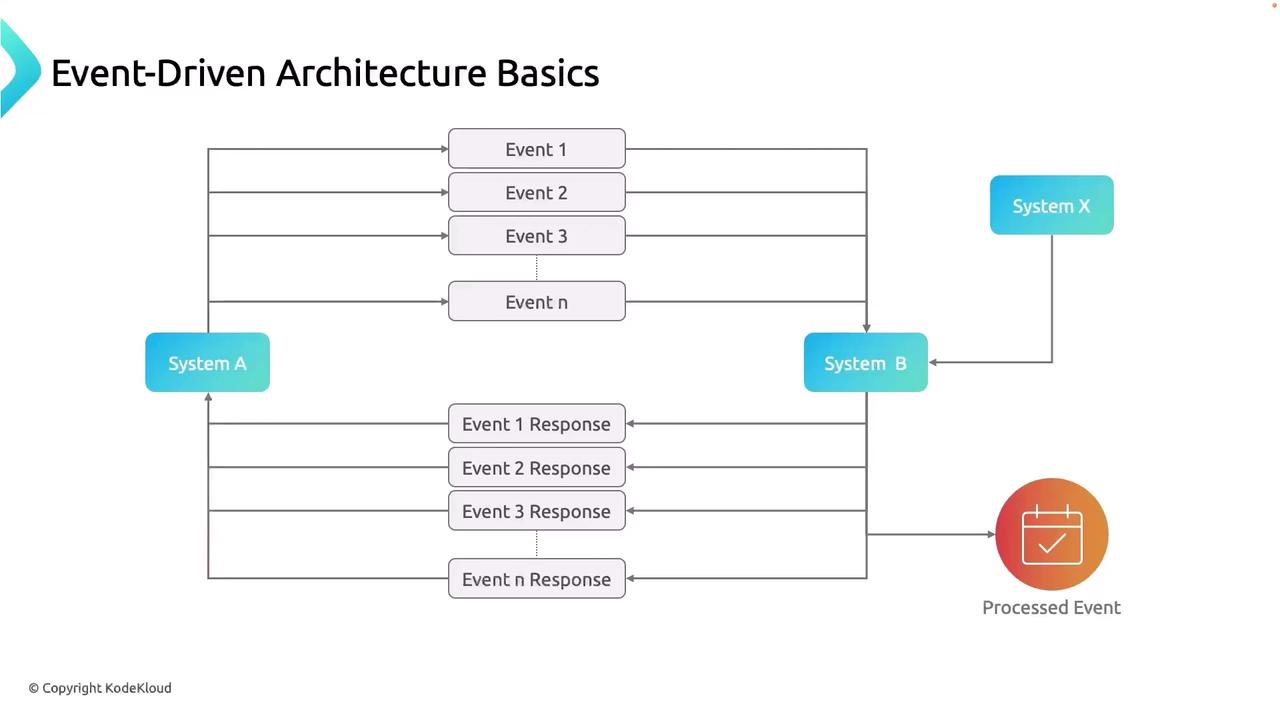

System A (a microservice, an IoT device, or any other event source) emits an event. System B consumes that event, transforms or enriches the data, and produces a “processed event.” Downstream consumers (System X, System Y, and so on) use these processed events for storage, analytics, or triggering further workflows. Optionally, acknowledgments or responses flow back to System A or other services, completing a request–response cycle.

At high volume, meaning hundreds or thousands of events per second, System B must process every message without dropping any and then reliably publish the results. Without the right infrastructure, that load leads to overload, failures, or data loss. Let’s examine the pitfalls of a naïve event-driven setup before looking at how Kafka mitigates them.
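A minimal sketch of System B’s role in this flow, assuming a hypothetical sensor reading as the event payload and a made-up threshold rule as the “enrichment.” It wires System B to its consumers with direct function calls, which is exactly the naïve point-to-point setup whose pitfalls are discussed below. The names `RawEvent`, `ProcessedEvent`, `enrich`, and `system_b` are illustrative, not part of any library.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class RawEvent:
    source: str
    device_id: str
    reading: float


@dataclass
class ProcessedEvent:
    device_id: str
    reading: float
    status: str  # field added by System B's (hypothetical) enrichment step


def enrich(event: RawEvent) -> ProcessedEvent:
    # Hypothetical rule: flag any reading above 100 as an alert.
    status = "ALERT" if event.reading > 100.0 else "OK"
    return ProcessedEvent(event.device_id, event.reading, status)


def system_b(events: Iterable[RawEvent],
             downstream: list[Callable[[ProcessedEvent], None]]) -> list[ProcessedEvent]:
    processed = []
    for raw in events:
        out = enrich(raw)
        processed.append(out)
        # Direct fan-out: System B must know and call every consumer itself.
        for consumer in downstream:
            consumer(out)
    return processed


storage = []                # stand-in for System X (storage)
alert_count = {"ALERT": 0}  # stand-in for System Y (analytics)


def count_alerts(event: ProcessedEvent) -> None:
    if event.status == "ALERT":
        alert_count["ALERT"] += 1


raw = [RawEvent("system-a", "sensor-1", 42.0), RawEvent("system-a", "sensor-2", 130.5)]
system_b(raw, [storage.append, count_alerts])
print(len(storage), alert_count["ALERT"])  # 2 1
```

Adding a new consumer here means editing System B’s call list, which is the coupling a broker removes.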

Without a central event broker, direct service-to-service communication can lead to cascading failures under load.
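To make the cascading-failure risk concrete, here is a minimal sketch of direct, synchronous calls between three hypothetical in-process services (`storage_service`, `analytics_service`, `notify_service`). When the analytics call fails, the notification service never receives the event, and nothing keeps the event around for a later retry.

```python
class DownstreamError(Exception):
    """Raised by a hypothetical downstream service that is overloaded."""


def storage_service(event: dict, store: list) -> None:
    store.append(event)


def analytics_service(event: dict) -> None:
    # Simulate an overloaded service that rejects every call.
    raise DownstreamError("analytics overloaded")


def notify_service(event: dict, sent: list) -> None:
    sent.append(event)


def publish_directly(event: dict, store: list, sent: list) -> None:
    # Point-to-point wiring: the publisher calls each consumer in turn,
    # so one failure aborts every call after it.
    storage_service(event, store)
    analytics_service(event)
    notify_service(event, sent)


store, sent = [], []
try:
    publish_directly({"order_id": 1}, store, sent)
except DownstreamError as exc:
    print(f"publish failed: {exc}")
print(len(store), len(sent))  # 1 0: the notification never happened and the event is not queued
```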

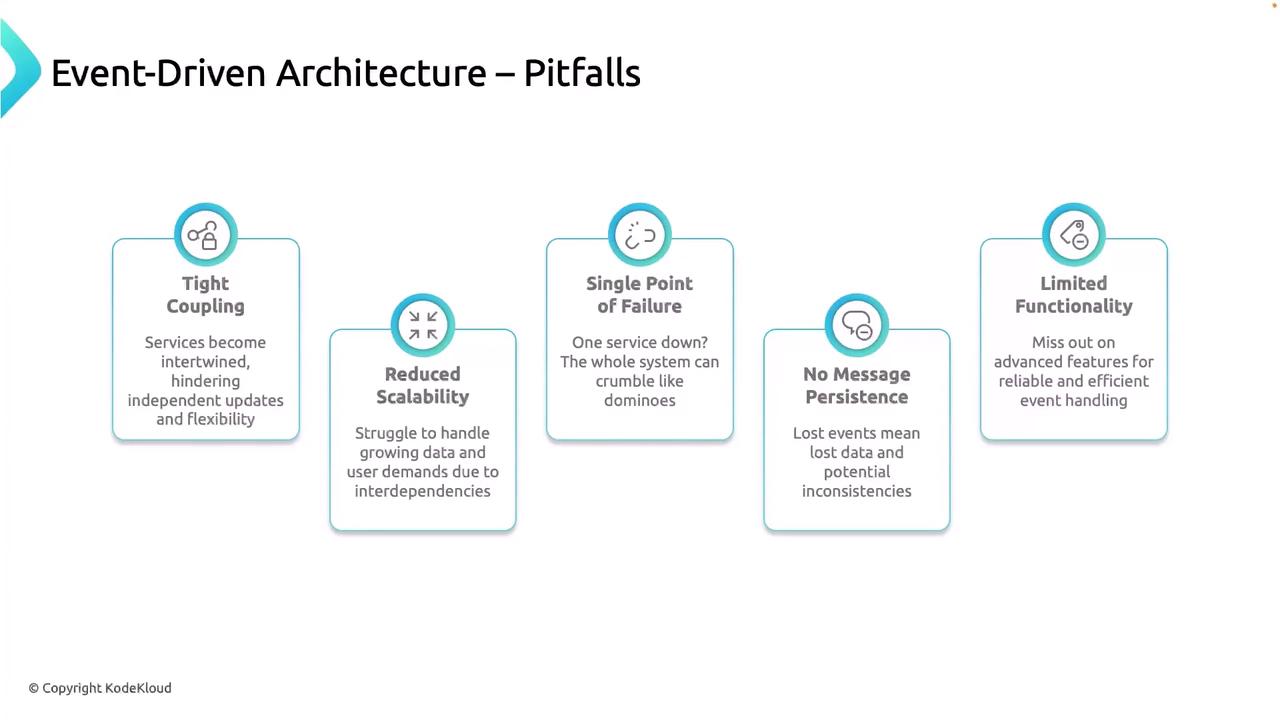

Common Pitfalls of Traditional EDA

| Pitfall | Impact | Example Scenario |
|---|---|---|
| Tight Coupling | Services share internal contracts, so an update to one service breaks others. | An e-commerce order service change breaks the payment service during a Black Friday surge. |
| Reduced Scalability | Dependencies prevent horizontal scaling, creating performance bottlenecks under load. | Without decoupling, a platform like Twitter would see massive latency handling millions of tweets during the Super Bowl. |
| Single Point of Failure | One component outage collapses the entire workflow. | If Netflix’s recommendation engine fails, users lose personalized suggestions, or streaming itself may be interrupted. |
| No Message Persistence | Events aren’t durably stored, risking data loss and inconsistency. | A trading platform that fails to persist trade events could incur regulatory penalties. |
| Limited Functionality | No real-time analytics, exactly-once processing, or retry mechanisms. | Without real-time updates or error recovery, a carrier’s tracking system (FedEx, for example) misses package alerts. |

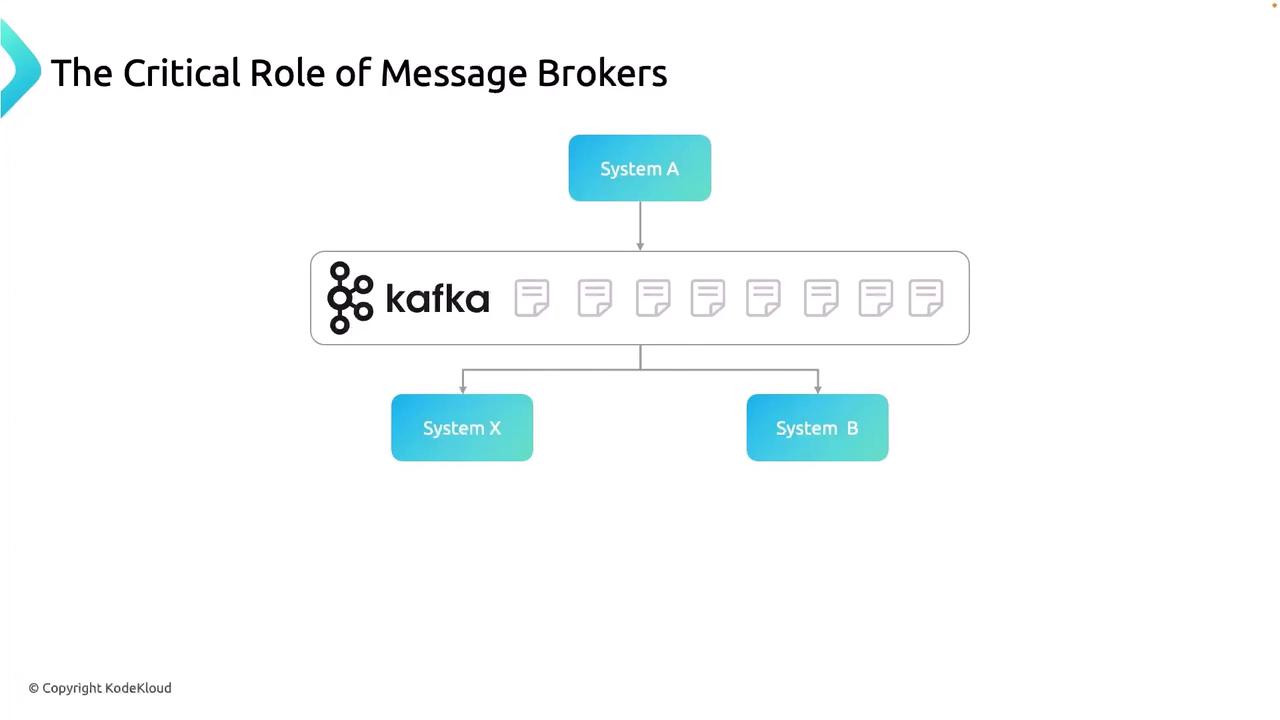

Introducing Kafka as the Message Broker

Instead of sending events directly between services, System A publishes them to Apache Kafka, the central event hub. Once an event is in Kafka:

- Producers are decoupled from consumers.

- Any number of consumers (System B, System X, or new services) can subscribe independently.

- Services don’t need to know about each other or the original source.

- Events can be replayed and audited, and they are retained for as long as the topic’s retention policy specifies (by time, by size, or indefinitely).
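The decoupling and replay properties listed above come from Kafka storing events in an append-only log and letting each consumer group track its own read position (offset). The sketch below models that idea in memory; `InMemoryTopic` and its methods are hypothetical and are not Kafka’s client API, and committing the offset at read time is a simplification (real Kafka consumers commit offsets as a separate step).

```python
from collections import defaultdict


class InMemoryTopic:
    """Hypothetical stand-in for one Kafka topic partition: an append-only event log."""

    def __init__(self) -> None:
        self._log = []
        # Each consumer group tracks its own next offset, independently of the others.
        self._offsets = defaultdict(int)

    def publish(self, event: dict) -> int:
        """Append an event and return its offset. The producer never sees consumers."""
        self._log.append(event)
        return len(self._log) - 1

    def poll(self, group: str, max_events: int = 10) -> list:
        """Return the next batch for `group` and advance (commit) its offset."""
        start = self._offsets[group]
        batch = self._log[start:start + max_events]
        self._offsets[group] = start + len(batch)
        return batch

    def seek(self, group: str, offset: int) -> None:
        """Move one group's position (e.g. back to 0 to replay); other groups are unaffected."""
        self._offsets[group] = offset


topic = InMemoryTopic()
for i in range(3):
    topic.publish({"event_id": i})

print(topic.poll("system-b"))                # all three events
print(topic.poll("system-x", max_events=1))  # [{'event_id': 0}]: independent position
print(topic.poll("system-b"))                # []: system-b is caught up
topic.seek("system-b", 0)                    # replay from the beginning
print(len(topic.poll("system-b")))           # 3
```

A new service subscribes simply by polling under a new group name; neither the producer nor the existing consumers change.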

Using Kafka topics as the primary communication channel simplifies scaling, error handling, and system evolution.

Why Kafka Excels: Key Features

- High Throughput: Handles millions of messages per second across a cluster with low latency, making it well suited to real-time data pipelines.

- Fault Tolerance: Partitions are replicated across brokers, so with a replication factor greater than one, a single broker failure does not take the data offline.

- Scalability: Add brokers or partitions while the cluster stays online to meet growing demand. A topic’s partition count can only be increased, and new brokers take on existing load only after partitions are reassigned to them.

- Real-Time Processing: Low-latency streaming enables immediate analytics, monitoring, and event-driven workflows.
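Much of Kafka’s scalability comes from partitioning: records with the same key go to the same partition, which preserves per-key ordering while different partitions are consumed in parallel. This sketch shows key-based assignment under one stated assumption: Kafka’s default partitioner hashes keys with murmur2, and `zlib.crc32` is used here only as a stand-in, so the partition numbers will not match a real cluster. `partition_for` and `assign` are hypothetical helpers.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition (crc32 stands in for Kafka's murmur2)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign(events, num_partitions):
    """Group (key, value) records by partition, preserving arrival order within each."""
    partitions = defaultdict(list)
    for key, value in events:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions


events = [("user-1", "login"), ("user-2", "click"),
          ("user-1", "logout"), ("user-3", "click")]
parts = assign(events, num_partitions=3)
for p in sorted(parts):
    # Each partition can be read by a different consumer in the same group.
    print(p, parts[p])
```

Because the mapping is a hash modulo the partition count, increasing the number of partitions later moves some keys to different partitions, which is one reason partition counts are usually planned up front.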