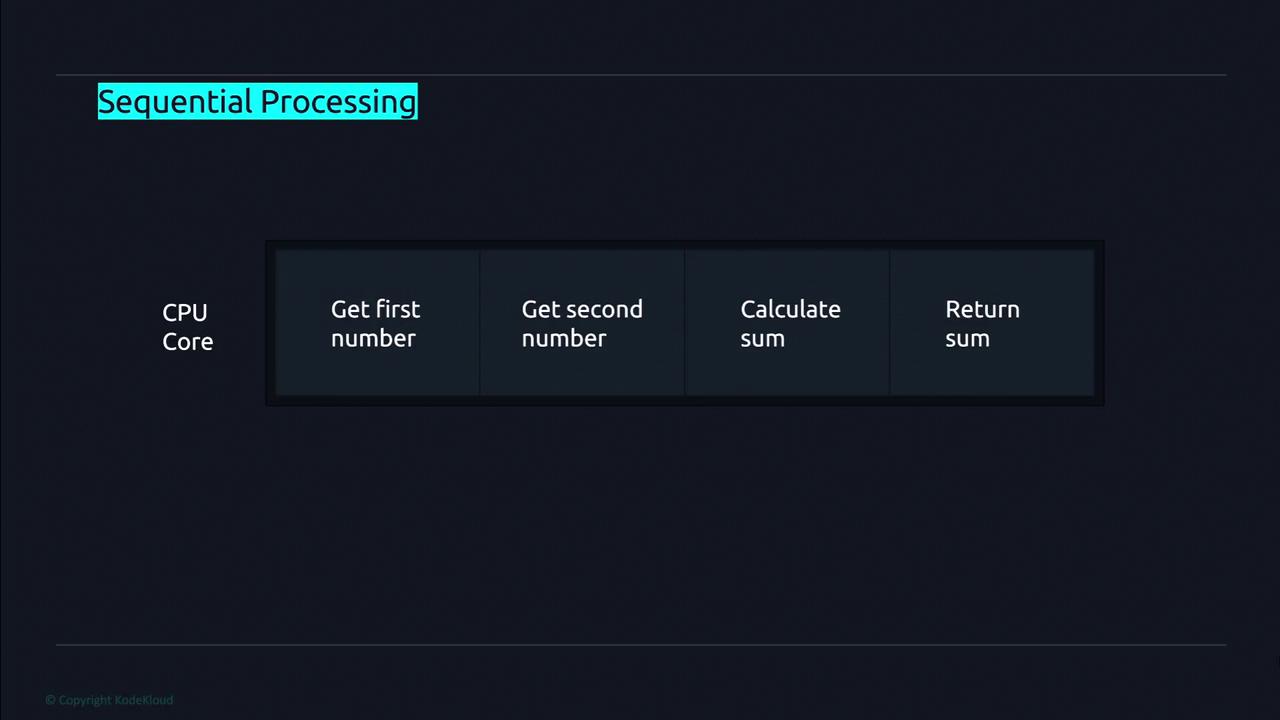

Sequential Programming

Sequential programming executes instructions one after another in a strict linear order. Each operation must finish before the next one begins. For instance, consider a simple program that calculates the sum of two numbers. The program might follow these steps:

- Request the first number from the user.

- Request the second number from the user.

- Compute the sum of the two numbers.

- Return the result.
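The four steps above can be sketched in Python. This is a minimal illustration. The function name `sum_program` and its `read` parameter are hypothetical: `read` defaults to the built-in `input`, and is there only so the steps can also be driven without a keyboard.

```python
def sum_program(read=input):
    """Run the four steps strictly in order; each waits for the previous one."""
    # Step 1: request the first number (blocks until the user answers).
    first = float(read("Enter the first number: "))
    # Step 2: only then request the second number.
    second = float(read("Enter the second number: "))
    # Step 3: compute the sum of the two numbers.
    total = first + second
    # Step 4: return the result.
    return total
```

Calling `sum_program()` prompts twice on the console, and the addition in step 3 cannot start until both answers have arrived.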

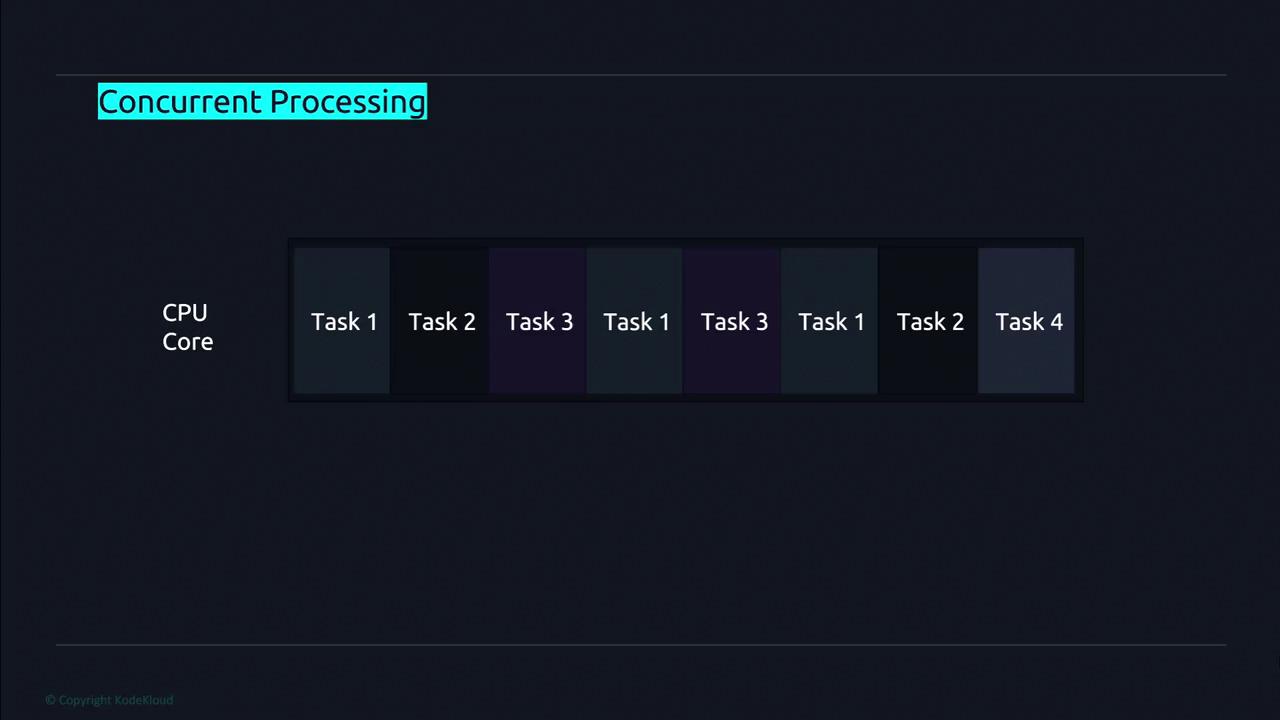

Multitasking

Modern systems extend sequential execution with multitasking. Even on a single-core processor, the operating system switches the CPU rapidly between multiple tasks, giving each one a short time slice, which creates the illusion that several tasks are running simultaneously.
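One rough way to picture time slicing is a round-robin loop that gives each task one slice of work before moving on to the next. The sketch below is a simplified, cooperative simulation built on Python generators. It is not how an operating system scheduler is implemented: a real scheduler preempts tasks using timer interrupts instead of waiting for them to yield. The names `task` and `round_robin` are hypothetical.

```python
from collections import deque

def task(name, steps):
    """A toy task: each yield marks the end of one time slice."""
    for i in range(steps):
        yield f"{name}:{i}"  # one unit of work, then give up the CPU

def round_robin(tasks):
    """Interleave tasks one slice at a time on a single simulated CPU."""
    ready = deque(tasks)
    trace = []
    while ready:
        current = ready.popleft()
        try:
            trace.append(next(current))  # run one slice
        except StopIteration:
            continue                     # task finished; drop it
        ready.append(current)            # not finished: back of the queue
    return trace
```

For example, `round_robin([task("A", 2), task("B", 3)])` returns `["A:0", "B:0", "A:1", "B:1", "B:2"]`. Only one slice runs at any moment, yet both tasks advance together.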

Understanding Concurrency

Concurrency refers to the ability to have multiple tasks or processes in progress at the same time. It is primarily achieved through multitasking, where the execution of tasks is interleaved on a processor. Note that concurrency is about managing multiple tasks, not necessarily executing them simultaneously. It makes applications more responsive by letting several tasks make progress at once, even if they never run in parallel.
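Python's `asyncio` module gives a compact way to observe concurrency without parallelism. The two coroutines below run on a single thread, and each `await asyncio.sleep(0)` hands control back to the event loop so that the other task can make progress. The worker names and the shared `log` list are illustrative assumptions.

```python
import asyncio

async def worker(name, steps, log):
    for i in range(steps):
        log.append(f"{name}:{i}")  # do one unit of work
        await asyncio.sleep(0)     # yield so the other task can run

async def main():
    log = []
    # Both workers are in progress at the same time, on one thread.
    await asyncio.gather(worker("download", 2, log), worker("ui", 2, log))
    return log

def run_demo():
    return asyncio.run(main())
```

The log returned by `run_demo()` alternates between the two workers, even though only one of them is executing at any instant.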

Concurrency in Multicore CPUs

In multicore systems, the benefits of concurrency are amplified. For example, on a system with two cores running two tasks, each core can execute one of the tasks, so both progress at the same time; when there are more tasks than cores, each core still switches between its share of the tasks. Using multiple cores or processors to handle tasks concurrently in this way is known as multiprocessing.
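The difference between one and two cores can be illustrated with a toy simulation. This is a hypothetical model, not real multiprocessing: tasks are assigned to cores round-robin up front (real schedulers balance load dynamically), and each core then switches between its own tasks one time slice at a time. The function name `simulate` and the slice counts are assumptions.

```python
from collections import deque

def simulate(work, cores):
    """Toy model of multiprocessing.

    work maps a task name to the number of time slices it needs (> 0).
    Returns one tuple per time slice showing which task each core ran
    (None means that core was idle).
    """
    # Assign tasks to cores round-robin (a simplifying assumption).
    queues = [deque() for _ in range(cores)]
    for i, (name, slices) in enumerate(work.items()):
        queues[i % cores].append([name, slices])

    schedule = []
    while any(queues):
        row = []
        for queue in queues:
            if not queue:
                row.append(None)  # nothing left for this core
                continue
            task = queue.popleft()
            row.append(task[0])
            task[1] -= 1
            if task[1] > 0:
                queue.append(task)  # unfinished: back of this core's queue
        schedule.append(tuple(row))
    return schedule
```

With one core, `simulate({"A": 2, "B": 2}, cores=1)` needs four slices because the core alternates between A and B; with `cores=2` the same work finishes in two slices, one task per core.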

Concurrency vs. Parallelism

It’s important to distinguish between concurrency and parallelism:

- Concurrency involves managing multiple tasks at once, often through rapid task switching. An example is a text editor that lets you keep typing while it saves a file.

- Parallelism uses multiple processing units to execute tasks simultaneously. A distributed data processing system that divides tasks across multiple clusters is a typical example of parallel processing.
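The text editor example can be sketched with a background thread: the save runs on its own thread while the main thread keeps accepting keystrokes. The function names are hypothetical, an `io.StringIO` object stands in for the file on disk, and `time.sleep` simulates a slow write. In the standard CPython build, the global interpreter lock lets only one thread execute Python bytecode at a time, so this is concurrency rather than parallelism.

```python
import io
import threading
import time

def save(snapshot, destination):
    time.sleep(0.05)             # pretend the disk is slow
    destination.write(snapshot)

def edit_session():
    buffer = ["Hello"]
    disk = io.StringIO()         # stand-in for a real file
    # Start saving a snapshot of the current text in the background.
    saver = threading.Thread(target=save, args=("".join(buffer), disk))
    saver.start()
    # Meanwhile, the user keeps typing.
    for ch in ", world":
        buffer.append(ch)
    saver.join()                 # wait for the save to finish
    return "".join(buffer), disk.getvalue()
```

Because the snapshot is taken before the thread starts, the saved text is the text as it was when the save began, while the editor buffer has moved on.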

While concurrency improves the efficiency and responsiveness of applications, parallelism takes advantage of hardware by executing tasks simultaneously across multiple cores or processors.
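For contrast, a parallel computation splits the work so that separate processors can run the pieces at the same time. The sketch below uses Python's `concurrent.futures.ProcessPoolExecutor`, which runs each chunk in a separate worker process and can therefore use several cores at once. The helper names and the chunking scheme are assumptions made for illustration.

```python
from concurrent.futures import ProcessPoolExecutor

def split_into_chunks(data, parts):
    """Split data into at most `parts` contiguous, nearly equal chunks."""
    size = max(1, -(-len(data) // parts))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]

def partial_sum(chunk):
    # Executed inside a worker process on one chunk of the data.
    return sum(chunk)

def parallel_sum(data, workers=4):
    chunks = split_into_chunks(data, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # Each chunk is summed in its own process; results are combined here.
        return sum(pool.map(partial_sum, chunks))
```

On platforms where worker processes are started with `spawn` (the default on Windows and macOS), call `parallel_sum` from inside an `if __name__ == "__main__":` block. For work as light as summing integers, the cost of starting processes and transferring data usually outweighs the gain; the pattern pays off when each chunk involves heavy computation.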