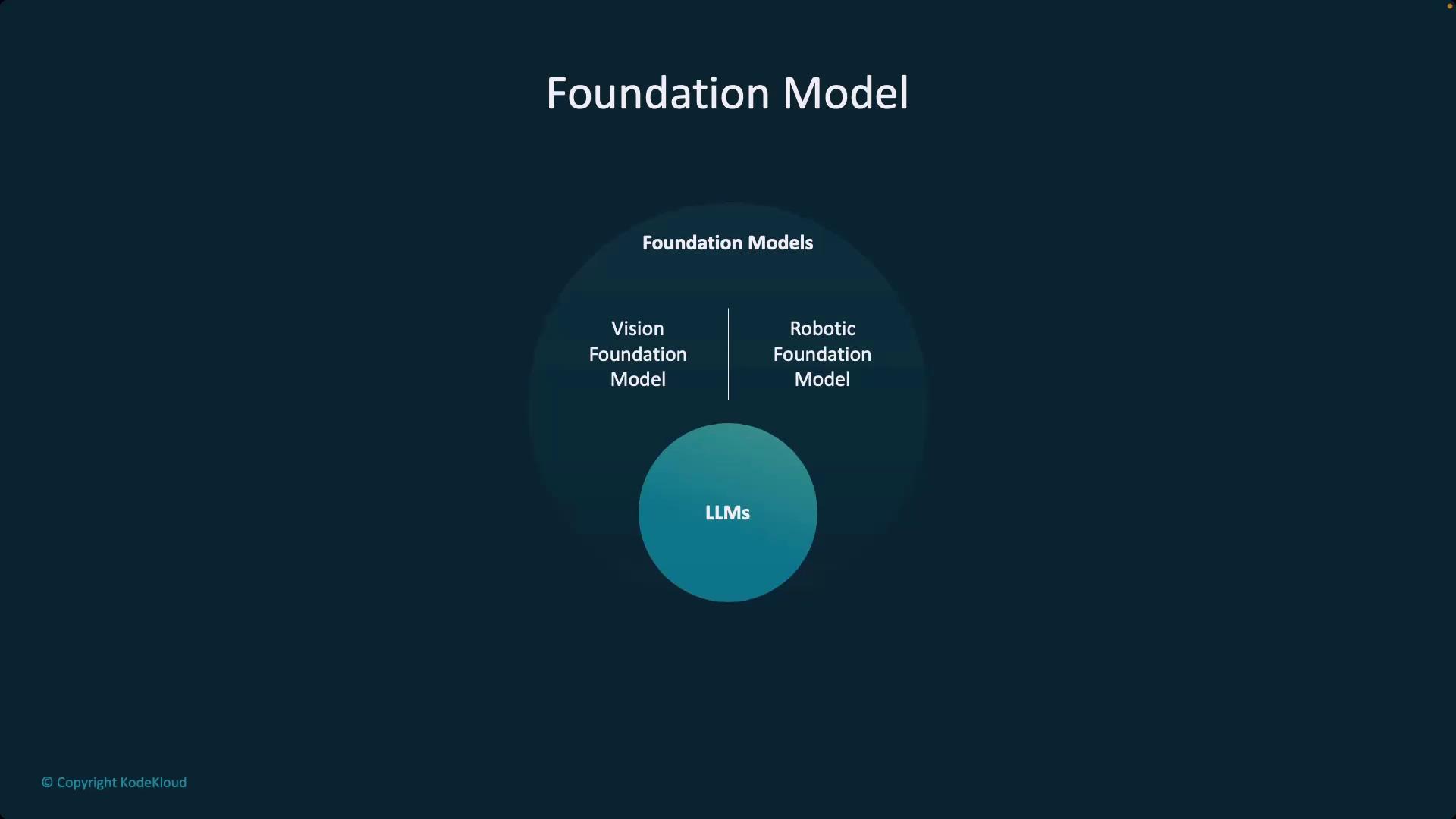

Foundation Models and LLMs

Foundation models are large models pretrained on broad data, which gives them general-purpose capabilities that can be fine-tuned for a variety of tasks. Examples include text generation models (known as large language models, or LLMs), image recognition systems, speech processing models, and multimodal models that handle several kinds of input and output.

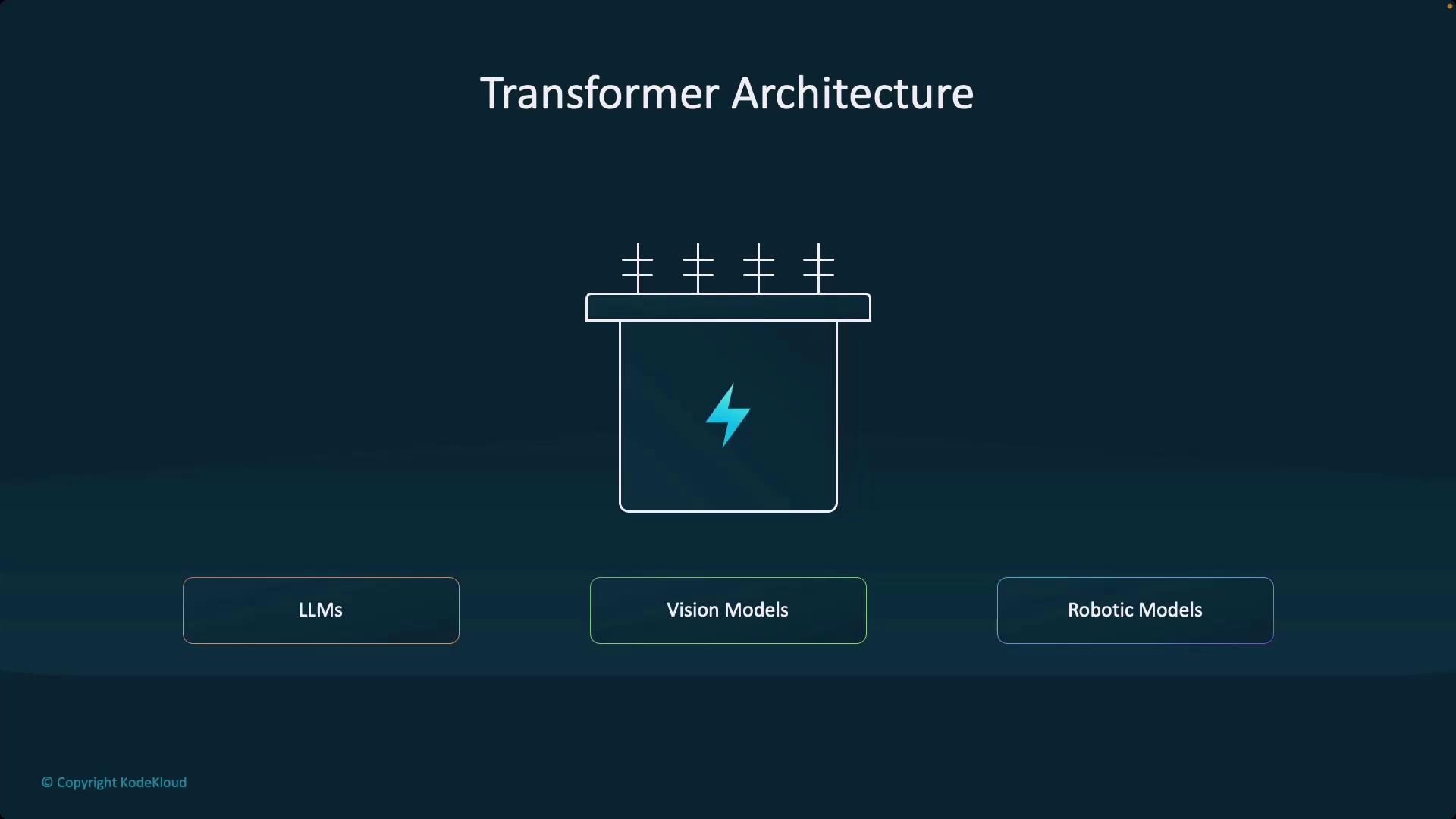

Transformer Architecture: The Driving Force Behind LLMs

At the core of these systems is the transformer architecture. It reshaped deep neural networks, enabling models to process and learn complex patterns in a way loosely inspired by networks of neurons in the brain. Transformers are integral to LLMs, and they also power vision and robotics models.
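To make the idea less abstract, here is a minimal sketch of scaled dot-product attention, the operation at the heart of a transformer layer. It is written in plain Python for readability and is not how production libraries implement it. The token vectors are made-up numbers, and the learned projection matrices a real transformer applies before this step are left out as a simplifying assumption.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # How strongly this query matches each key, normalized to weights.
        weights = softmax([dot(q, k) / scale for k in keys])
        # Each output is a weighted average of all value vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Three toy 2-dimensional token vectors (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: queries, keys, and values are the tokens themselves here.
# A real transformer would first apply learned projections to each role.
contextual = attention(tokens, tokens, tokens)
for row in contextual:
    print([round(x, 3) for x in row])
```

Each output row blends information from every token, weighted by similarity. That mixing step is what lets a transformer relate each position in the input to all the others.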

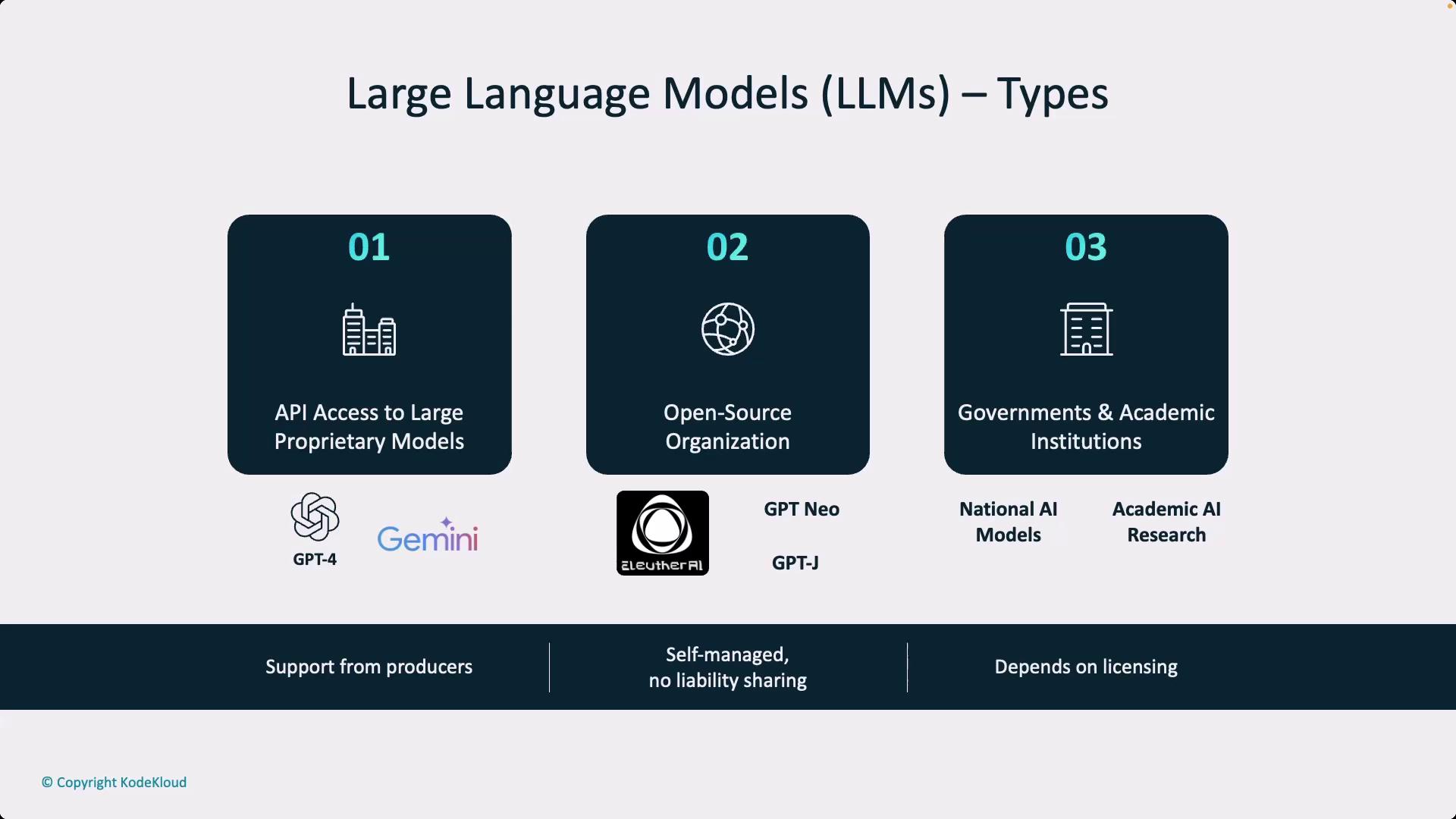

There are three primary approaches to consuming these models:

1. Proprietary Models

These include GPT-4, Gemini, Anthropic's models, and more. Typically accessed via APIs, they offer high-level functionality while keeping internal details (such as the weights and the full training data) under wraps. Developers can use these models without needing extensive knowledge of their internals.

2. Open-Source Models

Examples such as GPT-Neo, GPT-J, and other models from EleutherAI provide complete visibility into their code, datasets, and weights. This transparency enables deep customization and understanding. Open-source models are often supported by government bodies, academic institutions, or community organizations.

3. Government and Academic Models

These models are developed with backing from public institutions and come with structured support for product deployment, licensing, and continuous updates. Their role may evolve as the market matures, but they remain essential in production environments.

Consider licensing implications in any production deployment. Many models are distributed under copyleft or custom licenses that may restrict specific uses, such as using one model's outputs to fine-tune another. For API-based models, review the terms of service and usage limits as well.

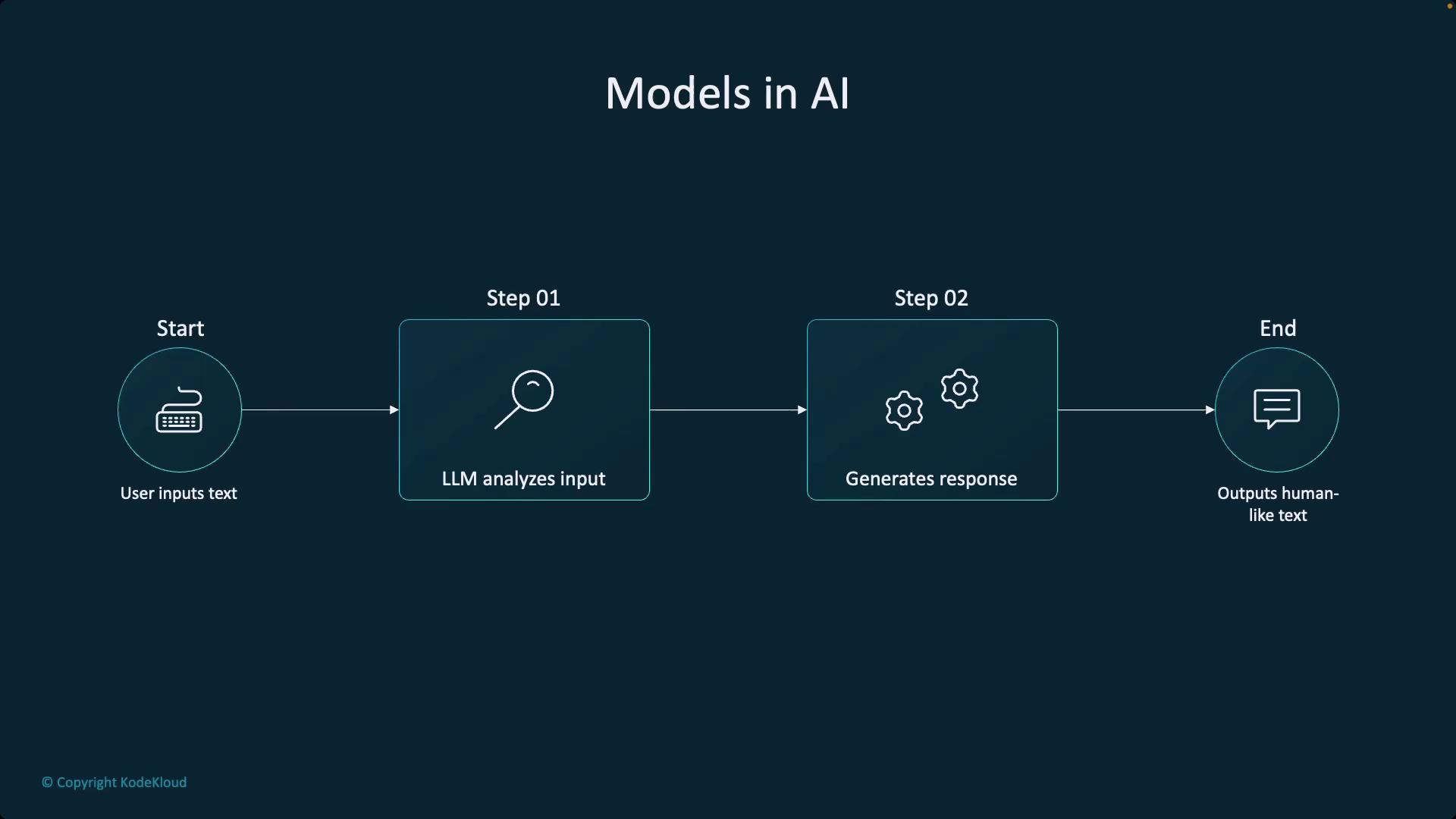

The Anatomy of an AI Model

At its core, an AI model is a mathematical system that processes inputs to yield outputs based on learned patterns. Whether it is a simple regression model or a sophisticated transformer-based system, the fundamental input-output mechanism remains constant. In a transformer-based LLM, the typical stages include:

- A user inputs text.

- The model processes and analyzes the input.

- It generates a corresponding response.

- The response is delivered back to the user.

In generating a response, the model must handle two aspects of language:

- Syntax: Ensuring that generated text follows proper grammatical structures.

- Semantics: Capturing and conveying the underlying meaning.
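The four stages above (input, processing, generation, delivery) can be sketched with a toy stand-in for an LLM. This is an illustration under heavy assumptions: the "model" is a bigram counter trained on a tiny made-up corpus, and the function names (`tokenize`, `train_bigrams`, `generate`, `respond`) are hypothetical. A real LLM replaces the counting step with a transformer that has billions of learned parameters.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus standing in for training data.
CORPUS = "the model reads text . the model writes text . the user reads text ."

def tokenize(text):
    """Stage 1: turn the user's text into a list of tokens (here, words)."""
    return text.lower().split()

def train_bigrams(tokens):
    """Count which token follows which; the counts are the 'learned patterns'."""
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, prompt_tokens, max_new_tokens=5):
    """Stages 2-3: inspect the last token, then repeatedly pick the likeliest next one."""
    output = list(prompt_tokens)
    if not output:
        return output  # nothing to continue from
    for _ in range(max_new_tokens):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # the model never saw this token during training
        next_token = candidates.most_common(1)[0][0]
        output.append(next_token)
        if next_token == ".":
            break  # treat a period as the end of the response
    return output

def respond(follows, user_text):
    """Stage 4: join the generated tokens back into text for the user."""
    return " ".join(generate(follows, tokenize(user_text)))

model = train_bigrams(tokenize(CORPUS))
reply = respond(model, "The user")
print(reply)  # prints "the user reads text ."
```

A bigram counter only captures which word tends to follow which, a crude slice of syntax, and nothing of semantics. Closing that gap is what the scale and architecture of a transformer-based LLM are for.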